Project Team ID = PTID-CDS-JUL21-1171 (Members - Diana, Hema, Pavithra and Sophiya)

Project ID = PRCL-0017 Customer Churn Business case

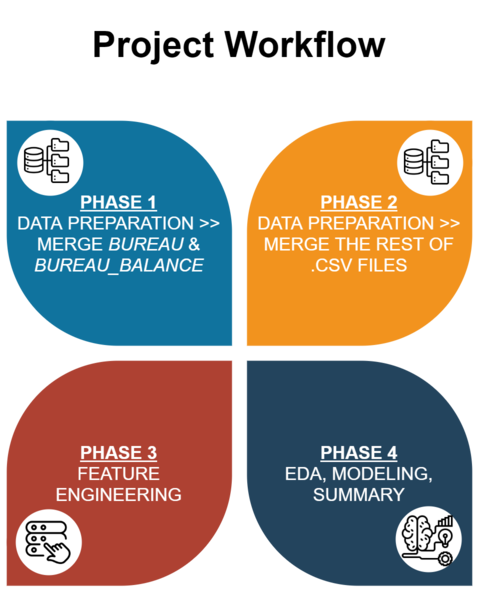

Due to the immensely colossal dataset, the whole process is divided into 4 Colabs notebooks (generally speaking),

the rest of it was just additional phase to compare models after reduce 4 features (on Phase 5, which refers to highly correlated to each other).

Phases Outline

Due to the immensely colossal datasets, we were facing difficulties in executing all the commands in a single notebook, so we are utilizing 4 Colab notebooks each executing a particular Phase of the project as noted below:

- Phase 1 → Done in the first notebook to load and merge the bureau and bureau_Balance datasets and then after Data Preparation exporting the merged dataset to GDrive.

- Phase 2 → Load the rest of datasets and then merged dataset from previous phase. After data preparation, merges all the datasets and exports the dataset to GDrive.

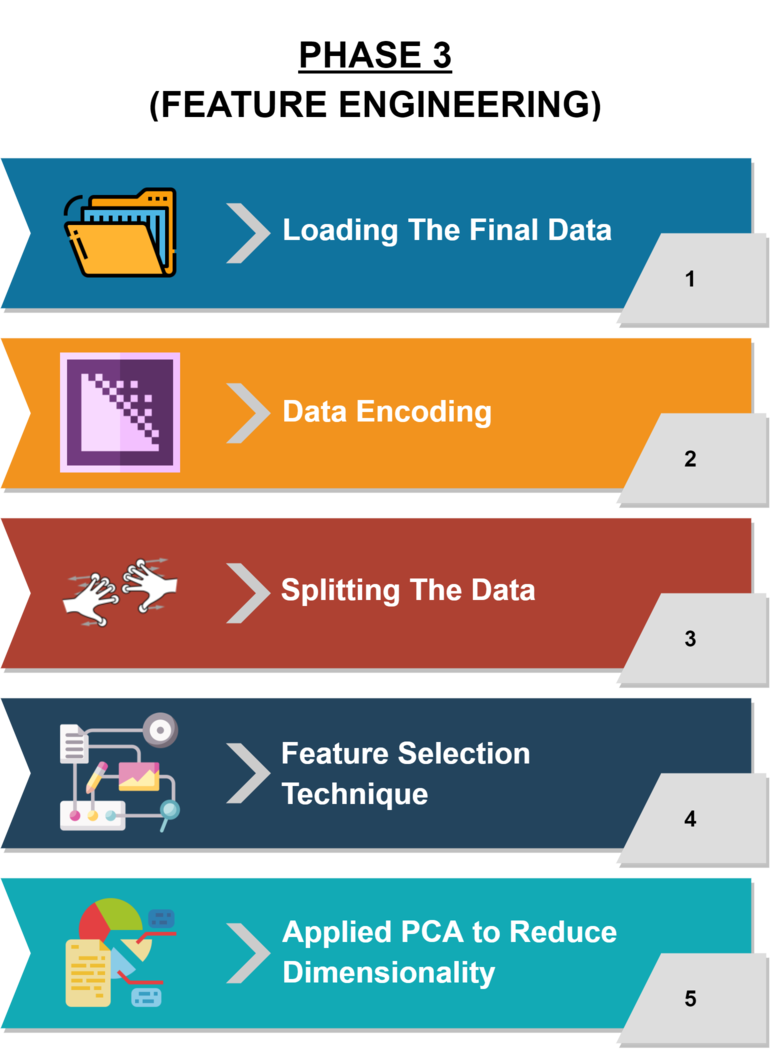

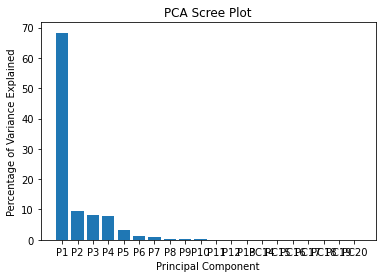

- Phase 3 → Do a PCA for the final merged dataset and find the consequential features that are utilizable for modeling, then export that dataset to GDrive.

- Phase 4 → Load the final dataset, EDA, Modeling, and Summary.

- [Optional] Phase 5 → Additional phase to compare models after reduce 4 features

(refers to highly correlated to each other).

—— Preliminary → Identify The Business Case ——

This is a Home Loan Default Data which contains multiple databases and sources to predict how capable each loan applicant is competent in repaying the loan.

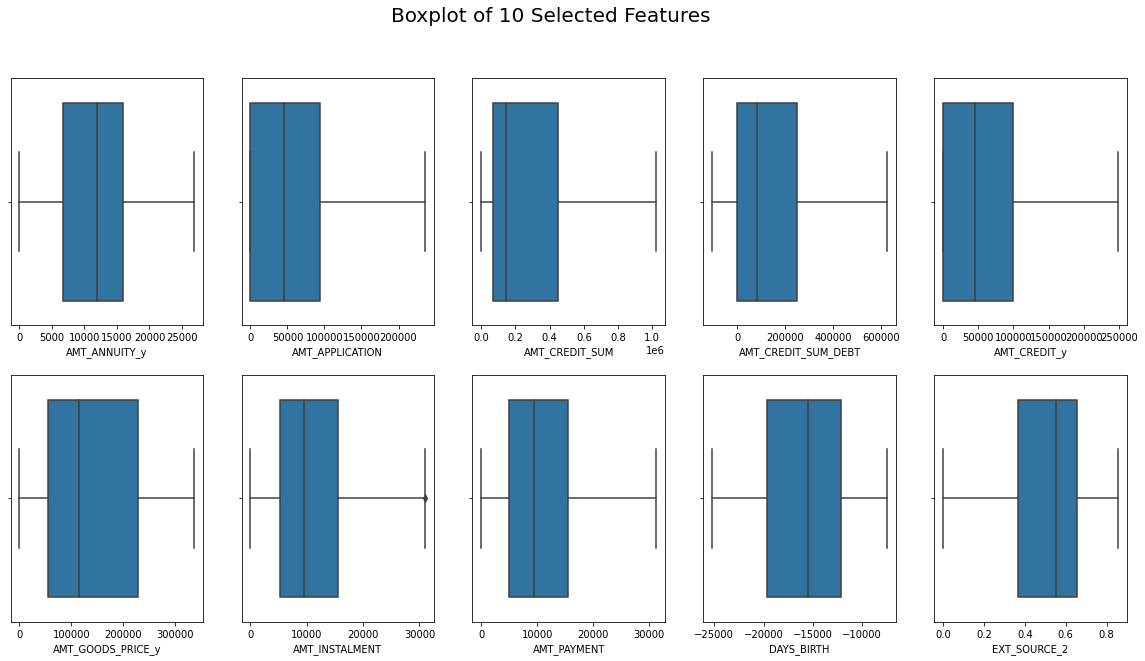

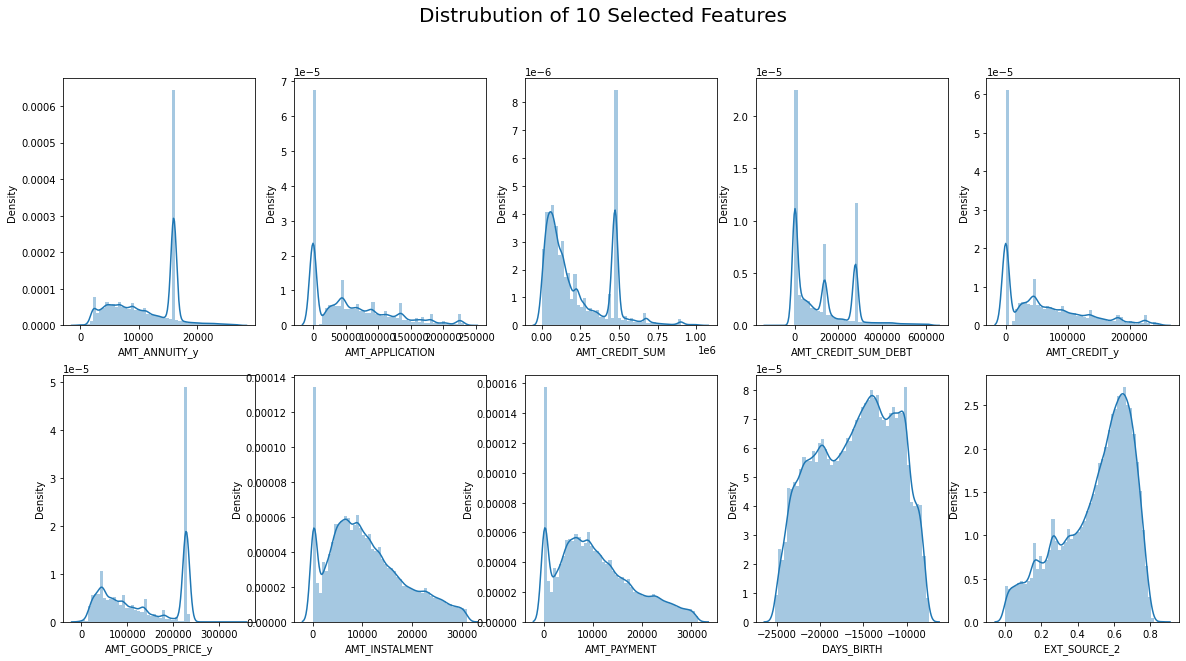

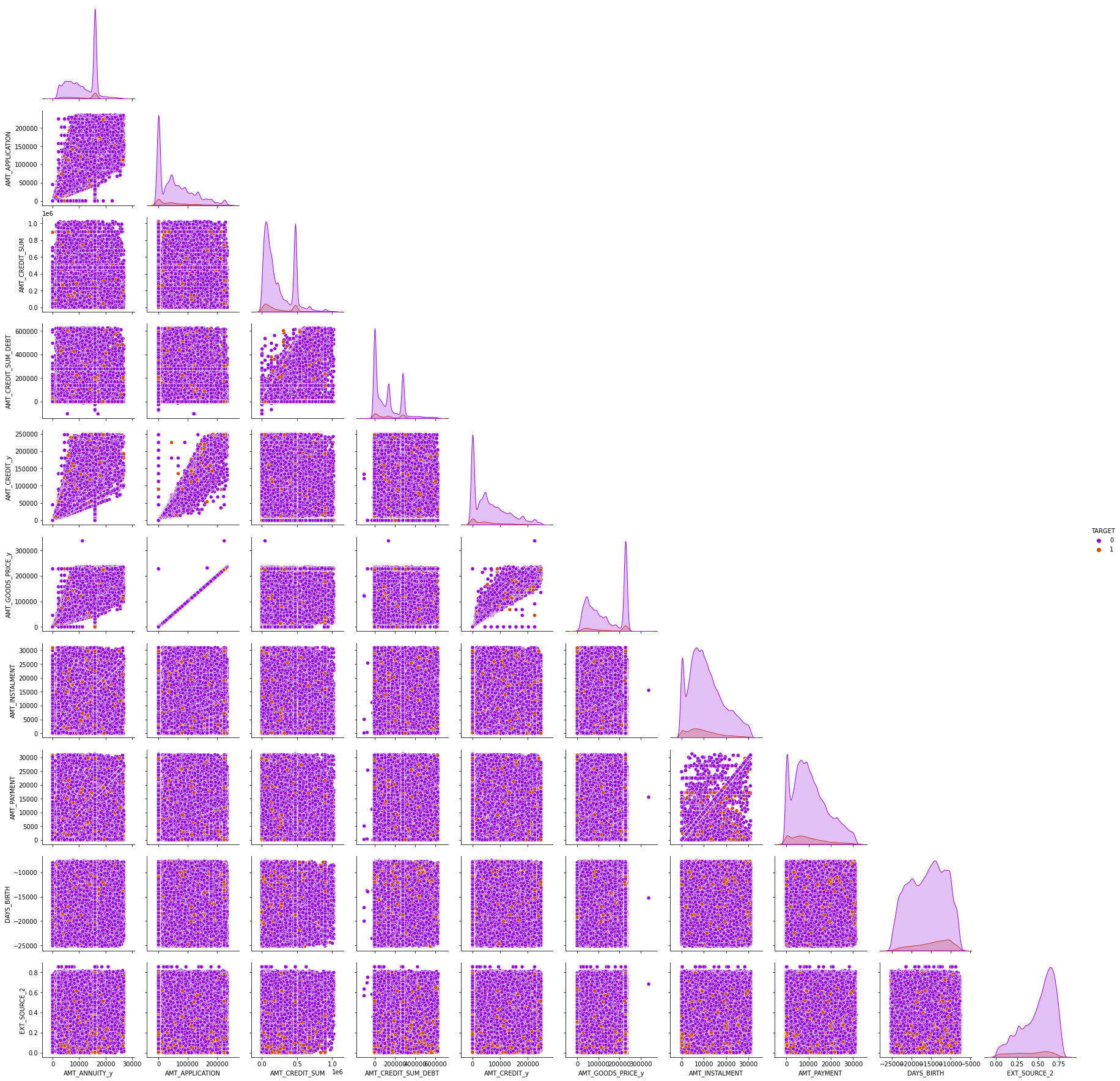

The target is to predict their clients repayment abilities.Doing so will ensure that clients capable of repayment are not rejected and that loans are given with a principal, maturity, and repayment calendar that will empower their clients to be successful. Consequently, in order to avoid ‘the curse of dimensionality’, we’re gonna involve the top 10 of most influence features and will involve it to be a part of prediction journey (passing 10 selected features into X).

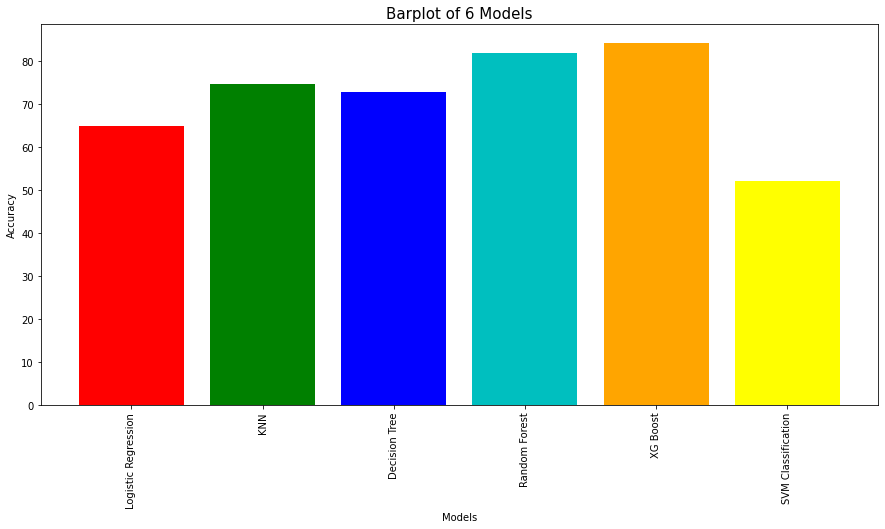

The most opportune method to solve this case is by applying classification(Logistic Regression, KNN, Decision Tree, Random Forest, XG Boost, SVM Classification).

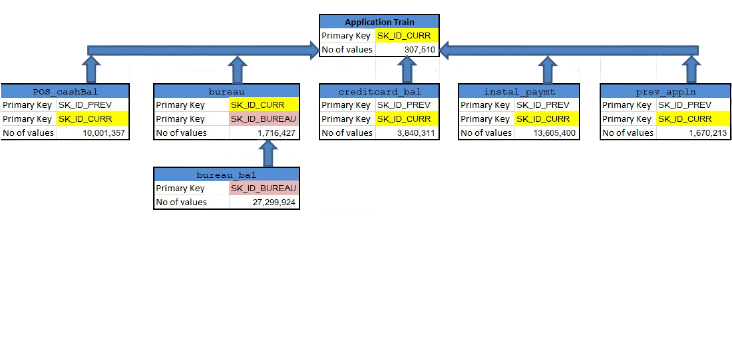

There are 7 databases that provide data for this project so analysing the databases in 7 steps, given by the workflow below:

Image Credit

import numpy as np

%matplotlib inline

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings("ignore")

pd.set_option('display.max_columns',999) #set column display number

pd.set_option('display.max_rows',200) #set row display number

pd.set_option('float_format', '{:f}'.format) #set float format

from google.colab import drive

drive.mount('/content/grive')

Drive already mounted at /content/grive; to attempt to forcibly remount, call drive.mount("/content/grive", force_remount=True).

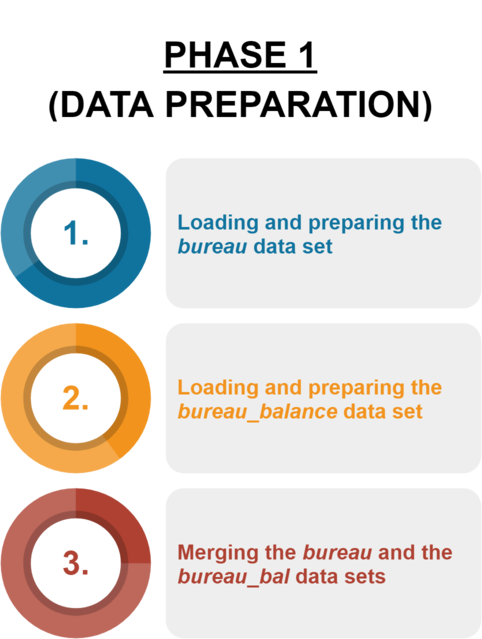

STEP 1: Loading and preparing the bureau data set

bureau = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/bureau.csv')

bureau.head()

| SK_ID_CURR | SK_ID_BUREAU | CREDIT_ACTIVE | CREDIT_CURRENCY | DAYS_CREDIT | CREDIT_DAY_OVERDUE | DAYS_CREDIT_ENDDATE | DAYS_ENDDATE_FACT | AMT_CREDIT_MAX_OVERDUE | CNT_CREDIT_PROLONG | AMT_CREDIT_SUM | AMT_CREDIT_SUM_DEBT | AMT_CREDIT_SUM_LIMIT | AMT_CREDIT_SUM_OVERDUE | CREDIT_TYPE | DAYS_CREDIT_UPDATE | AMT_ANNUITY | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 215354 | 5714462 | Closed | currency 1 | -497 | 0 | -153.000000 | -153.000000 | nan | 0 | 91323.000000 | 0.000000 | nan | 0.000000 | Consumer credit | -131 | nan |

| 1 | 215354 | 5714463 | Active | currency 1 | -208 | 0 | 1075.000000 | nan | nan | 0 | 225000.000000 | 171342.000000 | nan | 0.000000 | Credit card | -20 | nan |

| 2 | 215354 | 5714464 | Active | currency 1 | -203 | 0 | 528.000000 | nan | nan | 0 | 464323.500000 | nan | nan | 0.000000 | Consumer credit | -16 | nan |

| 3 | 215354 | 5714465 | Active | currency 1 | -203 | 0 | nan | nan | nan | 0 | 90000.000000 | nan | nan | 0.000000 | Credit card | -16 | nan |

| 4 | 215354 | 5714466 | Active | currency 1 | -629 | 0 | 1197.000000 | nan | 77674.500000 | 0 | 2700000.000000 | nan | nan | 0.000000 | Consumer credit | -21 | nan |

bureau.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1716428 entries, 0 to 1716427

Data columns (total 17 columns):

# Column Dtype

--- ------ -----

0 SK_ID_CURR int64

1 SK_ID_BUREAU int64

2 CREDIT_ACTIVE object

3 CREDIT_CURRENCY object

4 DAYS_CREDIT int64

5 CREDIT_DAY_OVERDUE int64

6 DAYS_CREDIT_ENDDATE float64

7 DAYS_ENDDATE_FACT float64

8 AMT_CREDIT_MAX_OVERDUE float64

9 CNT_CREDIT_PROLONG int64

10 AMT_CREDIT_SUM float64

11 AMT_CREDIT_SUM_DEBT float64

12 AMT_CREDIT_SUM_LIMIT float64

13 AMT_CREDIT_SUM_OVERDUE float64

14 CREDIT_TYPE object

15 DAYS_CREDIT_UPDATE int64

16 AMT_ANNUITY float64

dtypes: float64(8), int64(6), object(3)

memory usage: 222.6+ MB

Description of The Dataset:

SK_ID_CURR → ID of loan in our sample (one loan in our sample can have 0,1,2 or more related previous credits in credit bureau).

SK_ID_BUREAU → Recoded ID of previous Credit Bureau credit related to our loan (unique coding for each loan application).

CREDIT_ACTIVE → Status of the Credit Bureau (CB) reported credits.

CREDIT_CURRENCY → Recoded currency of the Credit Bureau credit.

DAYS_CREDIT → How many days before current application did client apply for Credit Bureau credit.

CREDIT_DAY_OVERDUE → Number of days past due on CB credit at the time of application for related loan in our sample.

DAYS_CREDIT_ENDDATE → Remaining duration of CB credit (in days) at the time of application in Home Credit.

DAYS_ENDDATE_FACT → Days since CB credit ended at the time of application in Home Credit (only for closed credit).

AMT_CREDIT_MAX_OVERDUE → Maximal amount overdue on the Credit Bureau credit so far (at application date of loan in our sample).

CNT_CREDIT_PROLONG → How many times was the Credit Bureau credit prolonged.

AMT_CREDIT_SUM → Current credit amount for the Credit Bureau credit.

AMT_CREDIT_SUM_DEBT → Current debt on Credit Bureau credit.

AMT_CREDIT_SUM_LIMIT → Current credit limit of credit card reported in Credit Bureau.

AMT_CREDIT_SUM_OVERDUE → Current amount overdue on Credit Bureau credit.

CREDIT_TYPE → Type of Credit Bureau credit (Car, cash,…).

DAYS_CREDIT_UPDATE → How many days before loan application did last information about the Credit Bureau credit come.

AMT_ANNUITY → Loan annuity.

# checking if the columns have null values

bureau.isnull().sum()

SK_ID_CURR 0

SK_ID_BUREAU 0

CREDIT_ACTIVE 0

CREDIT_CURRENCY 0

DAYS_CREDIT 0

CREDIT_DAY_OVERDUE 0

DAYS_CREDIT_ENDDATE 105553

DAYS_ENDDATE_FACT 633653

AMT_CREDIT_MAX_OVERDUE 1124488

CNT_CREDIT_PROLONG 0

AMT_CREDIT_SUM 13

AMT_CREDIT_SUM_DEBT 257669

AMT_CREDIT_SUM_LIMIT 591780

AMT_CREDIT_SUM_OVERDUE 0

CREDIT_TYPE 0

DAYS_CREDIT_UPDATE 0

AMT_ANNUITY 1226791

dtype: int64

# Finding the % of missing values in each column

round(100*(bureau.isnull().sum()/len(bureau.index)),2)

SK_ID_CURR 0.000000

SK_ID_BUREAU 0.000000

CREDIT_ACTIVE 0.000000

CREDIT_CURRENCY 0.000000

DAYS_CREDIT 0.000000

CREDIT_DAY_OVERDUE 0.000000

DAYS_CREDIT_ENDDATE 6.150000

DAYS_ENDDATE_FACT 36.920000

AMT_CREDIT_MAX_OVERDUE 65.510000

CNT_CREDIT_PROLONG 0.000000

AMT_CREDIT_SUM 0.000000

AMT_CREDIT_SUM_DEBT 15.010000

AMT_CREDIT_SUM_LIMIT 34.480000

AMT_CREDIT_SUM_OVERDUE 0.000000

CREDIT_TYPE 0.000000

DAYS_CREDIT_UPDATE 0.000000

AMT_ANNUITY 71.470000

dtype: float64

#Assigning NULL percentage value to a variable

bur_null = round(100*(bureau.isnull().sum()/len(bureau.index)),2)

# find columns with more than 50% missing values

colBur = bur_null[bur_null >= 50].index

# drop columns with high null percentage

bureau.drop(colBur,axis = 1,inplace = True)

#check null percentage after dropping

round(100*(bureau.isnull().sum()/len(bureau.index)),2)

SK_ID_CURR 0.000000

SK_ID_BUREAU 0.000000

CREDIT_ACTIVE 0.000000

CREDIT_CURRENCY 0.000000

DAYS_CREDIT 0.000000

CREDIT_DAY_OVERDUE 0.000000

DAYS_CREDIT_ENDDATE 6.150000

DAYS_ENDDATE_FACT 36.920000

CNT_CREDIT_PROLONG 0.000000

AMT_CREDIT_SUM 0.000000

AMT_CREDIT_SUM_DEBT 15.010000

AMT_CREDIT_SUM_LIMIT 34.480000

AMT_CREDIT_SUM_OVERDUE 0.000000

CREDIT_TYPE 0.000000

DAYS_CREDIT_UPDATE 0.000000

dtype: float64

# checking the shape after dropping

bureau.shape

(1716428, 15)

Remarks → 2 columns were dropped (they had > 50% of missing values.)

# checking the description

bureau.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| SK_ID_CURR | 1716428.000000 | 278214.933645 | 102938.558112 | 100001.000000 | 188866.750000 | 278055.000000 | 367426.000000 | 456255.000000 |

| SK_ID_BUREAU | 1716428.000000 | 5924434.489032 | 532265.728552 | 5000000.000000 | 5463953.750000 | 5926303.500000 | 6385681.250000 | 6843457.000000 |

| DAYS_CREDIT | 1716428.000000 | -1142.107685 | 795.164928 | -2922.000000 | -1666.000000 | -987.000000 | -474.000000 | 0.000000 |

| CREDIT_DAY_OVERDUE | 1716428.000000 | 0.818167 | 36.544428 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 2792.000000 |

| DAYS_CREDIT_ENDDATE | 1610875.000000 | 510.517362 | 4994.219837 | -42060.000000 | -1138.000000 | -330.000000 | 474.000000 | 31199.000000 |

| DAYS_ENDDATE_FACT | 1082775.000000 | -1017.437148 | 714.010626 | -42023.000000 | -1489.000000 | -897.000000 | -425.000000 | 0.000000 |

| CNT_CREDIT_PROLONG | 1716428.000000 | 0.006410 | 0.096224 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 9.000000 |

| AMT_CREDIT_SUM | 1716415.000000 | 354994.591918 | 1149811.343980 | 0.000000 | 51300.000000 | 125518.500000 | 315000.000000 | 585000000.000000 |

| AMT_CREDIT_SUM_DEBT | 1458759.000000 | 137085.119952 | 677401.130952 | -4705600.320000 | 0.000000 | 0.000000 | 40153.500000 | 170100000.000000 |

| AMT_CREDIT_SUM_LIMIT | 1124648.000000 | 6229.514980 | 45032.031476 | -586406.115000 | 0.000000 | 0.000000 | 0.000000 | 4705600.320000 |

| AMT_CREDIT_SUM_OVERDUE | 1716428.000000 | 37.912758 | 5937.650035 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 3756681.000000 |

| DAYS_CREDIT_UPDATE | 1716428.000000 | -593.748320 | 720.747312 | -41947.000000 | -908.000000 | -395.000000 | -33.000000 | 372.000000 |

Days credit, Days Credit End date, Days Enddate Fact, amt credit sum debt and amt credit sum limit have negative values. These negative values are noted and accepted as the negative values represent the past data from the date of application.

# Filling the null values with mean of their respective columns

bureau['DAYS_CREDIT_ENDDATE'].fillna(bureau['DAYS_CREDIT_ENDDATE'].mean(), inplace = True)

bureau['DAYS_ENDDATE_FACT'].fillna(bureau['DAYS_ENDDATE_FACT'].mean(), inplace = True)

bureau['AMT_CREDIT_SUM_DEBT'].fillna(bureau['AMT_CREDIT_SUM_DEBT'].mean(), inplace = True)

bureau['AMT_CREDIT_SUM_LIMIT'].fillna(bureau['AMT_CREDIT_SUM_LIMIT'].mean(), inplace = True)

bureau['AMT_CREDIT_SUM'].fillna(bureau['AMT_CREDIT_SUM'].mean(), inplace = True)

# checking to see if all the null values are filled

bureau.isnull().sum()

SK_ID_CURR 0

SK_ID_BUREAU 0

CREDIT_ACTIVE 0

CREDIT_CURRENCY 0

DAYS_CREDIT 0

CREDIT_DAY_OVERDUE 0

DAYS_CREDIT_ENDDATE 0

DAYS_ENDDATE_FACT 0

CNT_CREDIT_PROLONG 0

AMT_CREDIT_SUM 0

AMT_CREDIT_SUM_DEBT 0

AMT_CREDIT_SUM_LIMIT 0

AMT_CREDIT_SUM_OVERDUE 0

CREDIT_TYPE 0

DAYS_CREDIT_UPDATE 0

dtype: int64

The bureau data set is now clean with no missing values and ready to be merged with the other datasets.

STEP 2: Loading and preparing the bureau_balance data set

bureau_bal = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/bureau_balance.csv')

bureau_bal.head()

| SK_ID_BUREAU | MONTHS_BALANCE | STATUS | |

|---|---|---|---|

| 0 | 5715448 | 0 | C |

| 1 | 5715448 | -1 | C |

| 2 | 5715448 | -2 | C |

| 3 | 5715448 | -3 | C |

| 4 | 5715448 | -4 | C |

bureau_bal.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 27299925 entries, 0 to 27299924

Data columns (total 3 columns):

# Column Dtype

--- ------ -----

0 SK_ID_BUREAU int64

1 MONTHS_BALANCE int64

2 STATUS object

dtypes: int64(2), object(1)

memory usage: 624.8+ MB

# checking for missing values

bureau_bal.isnull().sum()

SK_ID_BUREAU 0

MONTHS_BALANCE 0

STATUS 0

dtype: int64

bureau_bal.describe()

| SK_ID_BUREAU | MONTHS_BALANCE | |

|---|---|---|

| count | 27299925.000000 | 27299925.000000 |

| mean | 6036297.332974 | -30.741687 |

| std | 492348.856904 | 23.864509 |

| min | 5001709.000000 | -96.000000 |

| 25% | 5730933.000000 | -46.000000 |

| 50% | 6070821.000000 | -25.000000 |

| 75% | 6431951.000000 | -11.000000 |

| max | 6842888.000000 | 0.000000 |

The MONTHS_BALANCE column has negative values but the team has chosen to leave the negative values as is because MONTHS_BALANCE describes the Month of balance relative to application date (-1 means the freshest balance date).

Description of the dataset:

SK_ID_BUREAU → Recoded ID of Credit Bureau credit (unique coding for each application) - use this to join to CREDIT_BUREAU table.

MONTHS_BALANCE → Month of balance relative to application date (-1 means the freshest balance date).

STATUS → Status of Credit Bureau loan during the month (active, closed, DPD0-30,… [C means closed, X means status unknown, 0 means no DPD, 1 means maximal did during month between 1-30, 2 means DPD 31-60,… 5 means DPD 120+ or sold or written off ]).

# Checking the no. of unique SK_ID_BUREAU values

countbur = bureau_bal["SK_ID_BUREAU"].unique()

countbur.shape

(817395,)

For each unique SK_ID_BUREAU there are duplicate rows that provide the data for multiple dates so we need to keep only that row that has the most recent information and drop the old information. In this dataset we will keep only those rows that have the most recent information about the MONTHS_BALANCE for each applicant relative to the application date (-1 means the freshest balance date) by retaining those rows that have the max value for months balance (given the negative values, max operator will give the most recent info) and delete the other rows for each unique SK_ID_BUREAU.

bureau_bal = bureau_bal.groupby('SK_ID_BUREAU', group_keys=False).apply(lambda x: x.loc[x.MONTHS_BALANCE.idxmax()])

bureau_bal.shape

(817395, 3)

bureau_bal['index'] = bureau_bal.index

| SK_ID_CURR | SK_ID_BUREAU | CREDIT_ACTIVE | CREDIT_CURRENCY | DAYS_CREDIT | CREDIT_DAY_OVERDUE | DAYS_CREDIT_ENDDATE | DAYS_ENDDATE_FACT | CNT_CREDIT_PROLONG | AMT_CREDIT_SUM | AMT_CREDIT_SUM_DEBT | AMT_CREDIT_SUM_LIMIT | AMT_CREDIT_SUM_OVERDUE | CREDIT_TYPE | DAYS_CREDIT_UPDATE | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 215354 | 5714462 | Closed | currency 1 | -497 | 0 | -153.000000 | -153.000000 | 0 | 91323.000000 | 0.000000 | 6229.514980 | 0.000000 | Consumer credit | -131 |

| 1 | 215354 | 5714463 | Active | currency 1 | -208 | 0 | 1075.000000 | -1017.437148 | 0 | 225000.000000 | 171342.000000 | 6229.514980 | 0.000000 | Credit card | -20 |

| 2 | 215354 | 5714464 | Active | currency 1 | -203 | 0 | 528.000000 | -1017.437148 | 0 | 464323.500000 | 137085.119952 | 6229.514980 | 0.000000 | Consumer credit | -16 |

| 3 | 215354 | 5714465 | Active | currency 1 | -203 | 0 | 510.517362 | -1017.437148 | 0 | 90000.000000 | 137085.119952 | 6229.514980 | 0.000000 | Credit card | -16 |

| 4 | 215354 | 5714466 | Active | currency 1 | -629 | 0 | 1197.000000 | -1017.437148 | 0 | 2700000.000000 | 137085.119952 | 6229.514980 | 0.000000 | Consumer credit | -21 |

bureau_bal.index.name = None

bureau_bal.head()

| SK_ID_BUREAU | MONTHS_BALANCE | STATUS | index | |

|---|---|---|---|---|

| 5001709 | 5001709 | 0 | C | 5001709 |

| 5001710 | 5001710 | 0 | C | 5001710 |

| 5001711 | 5001711 | 0 | X | 5001711 |

| 5001712 | 5001712 | 0 | C | 5001712 |

| 5001713 | 5001713 | 0 | X | 5001713 |

The bureau_balance data set is now clean with no missing values and duplicates and is now ready to be merged with the other datasets.

STEP 3: Merging the bureau and the bureau_bal data sets

# Left merge the two datasets

bureau_merged = pd.merge(bureau, bureau_bal, on='SK_ID_BUREAU', how='left')

print(bureau.shape, bureau_bal.shape, bureau_merged.shape)

(1716428, 15) (817395, 4) (1716428, 18)

# the above results show that there are duplicate rows for each SK_ID_CURR, we must keep only those rows that have the most recent info for applicant

# Checking the no. of unique SK_ID_CURR values

countmer = bureau_merged["SK_ID_CURR"].unique()

countmer.shape

(305811,)

# Keeping only those rows that have the most recent info from the application date and deleting old rows for each SK_ID_CURR

bureau_merged = bureau_merged.groupby('SK_ID_CURR', group_keys=False).apply(lambda x: x.loc[x.DAYS_CREDIT.idxmax()])

bureau_merged.shape

(305811, 18)

# Dropping SK_ID_BUREAU column as it is no longer needed for further merging of the datasets

bureau_merged.drop(['SK_ID_BUREAU'], axis = 1, inplace = True)

bureau_merged.shape

(305811, 17)

#Export the file

bureau_merged.to_csv('bureau_merged.csv')

from google.colab import files

files.download("bureau_merged.csv")

<IPython.core.display.Javascript object>

<IPython.core.display.Javascript object>

import numpy as np

%matplotlib inline

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings("ignore")

pd.set_option('display.max_columns',999) #set column display number

pd.set_option('display.max_rows',200) #set row display number

pd.set_option('float_format', '{:f}'.format) #set float format

from google.colab import drive

drive.mount('/content/grive')

Mounted at /content/grive

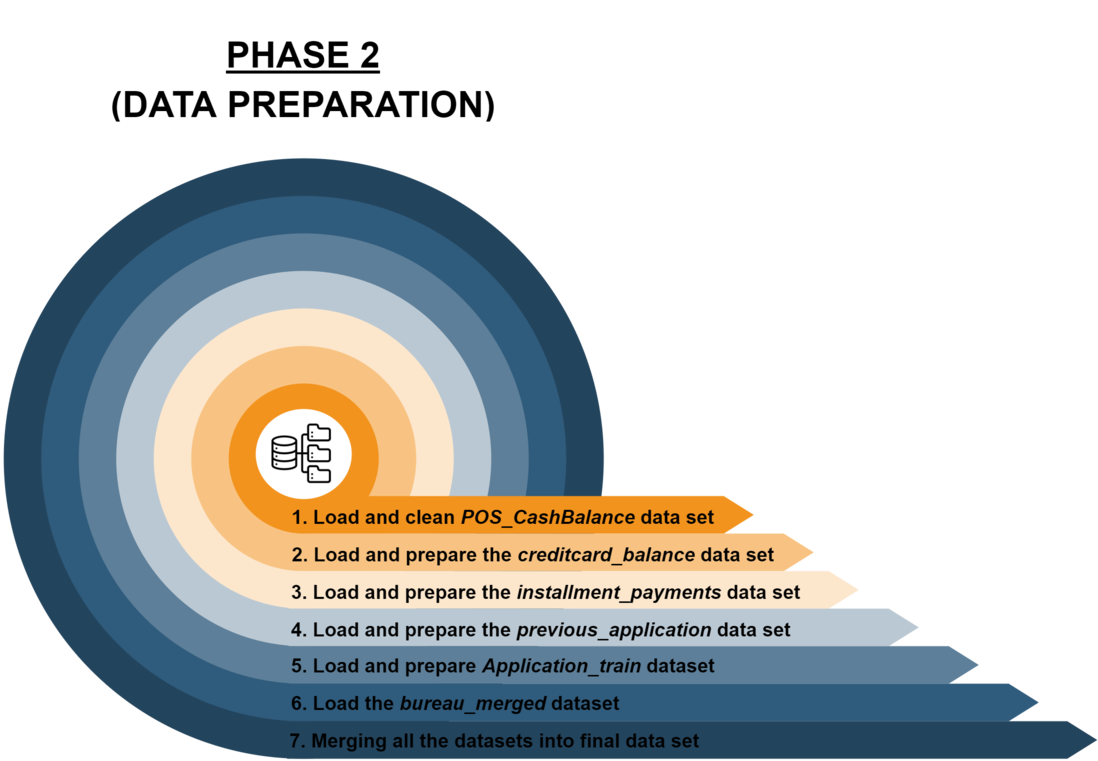

STEP 1: Loading and cleaning POS_CashBalance data set

# Loading the dataset

POS_cashBal = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/POS_CASH_balance.csv')

POS_cashBal.head()

| SK_ID_PREV | SK_ID_CURR | MONTHS_BALANCE | CNT_INSTALMENT | CNT_INSTALMENT_FUTURE | NAME_CONTRACT_STATUS | SK_DPD | SK_DPD_DEF | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1803195 | 182943 | -31 | 48.000000 | 45.000000 | Active | 0 | 0 |

| 1 | 1715348 | 367990 | -33 | 36.000000 | 35.000000 | Active | 0 | 0 |

| 2 | 1784872 | 397406 | -32 | 12.000000 | 9.000000 | Active | 0 | 0 |

| 3 | 1903291 | 269225 | -35 | 48.000000 | 42.000000 | Active | 0 | 0 |

| 4 | 2341044 | 334279 | -35 | 36.000000 | 35.000000 | Active | 0 | 0 |

POS_cashBal.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10001358 entries, 0 to 10001357

Data columns (total 8 columns):

# Column Dtype

--- ------ -----

0 SK_ID_PREV int64

1 SK_ID_CURR int64

2 MONTHS_BALANCE int64

3 CNT_INSTALMENT float64

4 CNT_INSTALMENT_FUTURE float64

5 NAME_CONTRACT_STATUS object

6 SK_DPD int64

7 SK_DPD_DEF int64

dtypes: float64(2), int64(5), object(1)

memory usage: 610.4+ MB

Description of The Dataset:

SK_ID_PREV → ID of previous credit in Home Credit related to loan in our sample. (One loan in our sample can have 0,1,2 or more previous loans in Home Credit).

SK_ID_CURR → ID of loan in our sample.

MONTHS_BALANCE → Month of balance relative to application date (-1 means the freshest balance date).

CNT_INSTALMENT → Term of previous credit (can change over time).

CNT_INSTALMENT_FUTURE → Installments left to pay on the previous credit.

NAME_CONTRACT_STATUS → Contract status during the month.

SK_DPD → DPD (days past due) during the month of previous credit.

SK_DPD_DEF → DPD during the month with tolerance (debts with low loan amounts are ignored) of the previous credit.

# checking for the null values in the columns

POS_cashBal.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

MONTHS_BALANCE 0

CNT_INSTALMENT 26071

CNT_INSTALMENT_FUTURE 26087

NAME_CONTRACT_STATUS 0

SK_DPD 0

SK_DPD_DEF 0

dtype: int64

# checking to see if there are any negative values

POS_cashBal.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| SK_ID_PREV | 10001358.000000 | 1903216.598957 | 535846.530722 | 1000001.000000 | 1434405.000000 | 1896565.000000 | 2368963.000000 | 2843499.000000 |

| SK_ID_CURR | 10001358.000000 | 278403.863306 | 102763.745090 | 100001.000000 | 189550.000000 | 278654.000000 | 367429.000000 | 456255.000000 |

| MONTHS_BALANCE | 10001358.000000 | -35.012588 | 26.066570 | -96.000000 | -54.000000 | -28.000000 | -13.000000 | -1.000000 |

| CNT_INSTALMENT | 9975287.000000 | 17.089650 | 11.995056 | 1.000000 | 10.000000 | 12.000000 | 24.000000 | 92.000000 |

| CNT_INSTALMENT_FUTURE | 9975271.000000 | 10.483840 | 11.109058 | 0.000000 | 3.000000 | 7.000000 | 14.000000 | 85.000000 |

| SK_DPD | 10001358.000000 | 11.606928 | 132.714043 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 4231.000000 |

| SK_DPD_DEF | 10001358.000000 | 0.654468 | 32.762491 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 3595.000000 |

MONTHS_BALANCE is the only column that has negative values. We have chosen to leave the negative signs as is, as it makes sense to have the negative values as it reflects the Month of balance relative to application date (-1 means the freshest balance date).

# Checking the % of missing values in each column

round(100*(POS_cashBal.isnull().sum()/len(POS_cashBal.index)),2)

SK_ID_PREV 0.000000

SK_ID_CURR 0.000000

MONTHS_BALANCE 0.000000

CNT_INSTALMENT 0.260000

CNT_INSTALMENT_FUTURE 0.260000

NAME_CONTRACT_STATUS 0.000000

SK_DPD 0.000000

SK_DPD_DEF 0.000000

dtype: float64

# Filling the missing values in the columns with means of the respective columns

POS_cashBal['CNT_INSTALMENT'].fillna(POS_cashBal['CNT_INSTALMENT'].mean(), inplace = True)

POS_cashBal['CNT_INSTALMENT_FUTURE'].fillna(POS_cashBal['CNT_INSTALMENT_FUTURE'].mean(), inplace = True)

# checking if there are any more null values

POS_cashBal.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

MONTHS_BALANCE 0

CNT_INSTALMENT 0

CNT_INSTALMENT_FUTURE 0

NAME_CONTRACT_STATUS 0

SK_DPD 0

SK_DPD_DEF 0

dtype: int64

#Checking the no. of unique SK_ID_CURR values

count = POS_cashBal["SK_ID_CURR"].unique()

count.shape

(337252,)

For each unique SK_ID_CURR there are duplicate rows that provide the data for the applicant on multiple dates so we need to keep only that row that has the most recent information and drop the old information. In this dataset we will keep only those rows that have the max MONTHS_BALANCE (least negative values as to the latest Month of balance relative to application date (-1 means the freshest balance date)) and delete the other rows for each unique SK_ID_CURR.

POS_cashBal = POS_cashBal.groupby('SK_ID_CURR', group_keys=False).apply(lambda x: x.loc[x.MONTHS_BALANCE.idxmax()])

POS_cashBal.shape

(337252, 8)

POS_cashBal['index'] = POS_cashBal.index

POS_cashBal.index.name = None

POS_cashBal.drop(['SK_ID_PREV', 'index'], axis = 1, inplace = True)

POS_cashBal.head()

| SK_ID_CURR | MONTHS_BALANCE | CNT_INSTALMENT | CNT_INSTALMENT_FUTURE | NAME_CONTRACT_STATUS | SK_DPD | SK_DPD_DEF | |

|---|---|---|---|---|---|---|---|

| 100001 | 100001 | -53 | 4.000000 | 0.000000 | Completed | 0 | 0 |

| 100002 | 100002 | -1 | 24.000000 | 6.000000 | Active | 0 | 0 |

| 100003 | 100003 | -18 | 7.000000 | 0.000000 | Completed | 0 | 0 |

| 100004 | 100004 | -24 | 3.000000 | 0.000000 | Completed | 0 | 0 |

| 100005 | 100005 | -15 | 9.000000 | 0.000000 | Completed | 0 | 0 |

The POSitive_cash_balance data set is now clean with no missing values and duplicates and is now ready to be merged with the other datasets.

STEP 2: Loading and preparing the creditcard_balance data set

creditcard_bal = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/credit_card_balance.csv')

creditcard_bal.head()

| SK_ID_PREV | SK_ID_CURR | MONTHS_BALANCE | AMT_BALANCE | AMT_CREDIT_LIMIT_ACTUAL | AMT_DRAWINGS_ATM_CURRENT | AMT_DRAWINGS_CURRENT | AMT_DRAWINGS_OTHER_CURRENT | AMT_DRAWINGS_POS_CURRENT | AMT_INST_MIN_REGULARITY | AMT_PAYMENT_CURRENT | AMT_PAYMENT_TOTAL_CURRENT | AMT_RECEIVABLE_PRINCIPAL | AMT_RECIVABLE | AMT_TOTAL_RECEIVABLE | CNT_DRAWINGS_ATM_CURRENT | CNT_DRAWINGS_CURRENT | CNT_DRAWINGS_OTHER_CURRENT | CNT_DRAWINGS_POS_CURRENT | CNT_INSTALMENT_MATURE_CUM | NAME_CONTRACT_STATUS | SK_DPD | SK_DPD_DEF | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2562384 | 378907 | -6 | 56.970000 | 135000 | 0.000000 | 877.500000 | 0.000000 | 877.500000 | 1700.325000 | 1800.000000 | 1800.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 1 | 0.000000 | 1.000000 | 35.000000 | Active | 0 | 0 |

| 1 | 2582071 | 363914 | -1 | 63975.555000 | 45000 | 2250.000000 | 2250.000000 | 0.000000 | 0.000000 | 2250.000000 | 2250.000000 | 2250.000000 | 60175.080000 | 64875.555000 | 64875.555000 | 1.000000 | 1 | 0.000000 | 0.000000 | 69.000000 | Active | 0 | 0 |

| 2 | 1740877 | 371185 | -7 | 31815.225000 | 450000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 2250.000000 | 2250.000000 | 2250.000000 | 26926.425000 | 31460.085000 | 31460.085000 | 0.000000 | 0 | 0.000000 | 0.000000 | 30.000000 | Active | 0 | 0 |

| 3 | 1389973 | 337855 | -4 | 236572.110000 | 225000 | 2250.000000 | 2250.000000 | 0.000000 | 0.000000 | 11795.760000 | 11925.000000 | 11925.000000 | 224949.285000 | 233048.970000 | 233048.970000 | 1.000000 | 1 | 0.000000 | 0.000000 | 10.000000 | Active | 0 | 0 |

| 4 | 1891521 | 126868 | -1 | 453919.455000 | 450000 | 0.000000 | 11547.000000 | 0.000000 | 11547.000000 | 22924.890000 | 27000.000000 | 27000.000000 | 443044.395000 | 453919.455000 | 453919.455000 | 0.000000 | 1 | 0.000000 | 1.000000 | 101.000000 | Active | 0 | 0 |

creditcard_bal.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 3840312 entries, 0 to 3840311

Data columns (total 23 columns):

# Column Dtype

--- ------ -----

0 SK_ID_PREV int64

1 SK_ID_CURR int64

2 MONTHS_BALANCE int64

3 AMT_BALANCE float64

4 AMT_CREDIT_LIMIT_ACTUAL int64

5 AMT_DRAWINGS_ATM_CURRENT float64

6 AMT_DRAWINGS_CURRENT float64

7 AMT_DRAWINGS_OTHER_CURRENT float64

8 AMT_DRAWINGS_POS_CURRENT float64

9 AMT_INST_MIN_REGULARITY float64

10 AMT_PAYMENT_CURRENT float64

11 AMT_PAYMENT_TOTAL_CURRENT float64

12 AMT_RECEIVABLE_PRINCIPAL float64

13 AMT_RECIVABLE float64

14 AMT_TOTAL_RECEIVABLE float64

15 CNT_DRAWINGS_ATM_CURRENT float64

16 CNT_DRAWINGS_CURRENT int64

17 CNT_DRAWINGS_OTHER_CURRENT float64

18 CNT_DRAWINGS_POS_CURRENT float64

19 CNT_INSTALMENT_MATURE_CUM float64

20 NAME_CONTRACT_STATUS object

21 SK_DPD int64

22 SK_DPD_DEF int64

dtypes: float64(15), int64(7), object(1)

memory usage: 673.9+ MB

# checking for missing values in the columns

creditcard_bal.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

MONTHS_BALANCE 0

AMT_BALANCE 0

AMT_CREDIT_LIMIT_ACTUAL 0

AMT_DRAWINGS_ATM_CURRENT 749816

AMT_DRAWINGS_CURRENT 0

AMT_DRAWINGS_OTHER_CURRENT 749816

AMT_DRAWINGS_POS_CURRENT 749816

AMT_INST_MIN_REGULARITY 305236

AMT_PAYMENT_CURRENT 767988

AMT_PAYMENT_TOTAL_CURRENT 0

AMT_RECEIVABLE_PRINCIPAL 0

AMT_RECIVABLE 0

AMT_TOTAL_RECEIVABLE 0

CNT_DRAWINGS_ATM_CURRENT 749816

CNT_DRAWINGS_CURRENT 0

CNT_DRAWINGS_OTHER_CURRENT 749816

CNT_DRAWINGS_POS_CURRENT 749816

CNT_INSTALMENT_MATURE_CUM 305236

NAME_CONTRACT_STATUS 0

SK_DPD 0

SK_DPD_DEF 0

dtype: int64

# Finding the % of missing values in the columns

round(100*(creditcard_bal.isnull().sum()/len(creditcard_bal.index)),2)

SK_ID_PREV 0.000000

SK_ID_CURR 0.000000

MONTHS_BALANCE 0.000000

AMT_BALANCE 0.000000

AMT_CREDIT_LIMIT_ACTUAL 0.000000

AMT_DRAWINGS_ATM_CURRENT 19.520000

AMT_DRAWINGS_CURRENT 0.000000

AMT_DRAWINGS_OTHER_CURRENT 19.520000

AMT_DRAWINGS_POS_CURRENT 19.520000

AMT_INST_MIN_REGULARITY 7.950000

AMT_PAYMENT_CURRENT 20.000000

AMT_PAYMENT_TOTAL_CURRENT 0.000000

AMT_RECEIVABLE_PRINCIPAL 0.000000

AMT_RECIVABLE 0.000000

AMT_TOTAL_RECEIVABLE 0.000000

CNT_DRAWINGS_ATM_CURRENT 19.520000

CNT_DRAWINGS_CURRENT 0.000000

CNT_DRAWINGS_OTHER_CURRENT 19.520000

CNT_DRAWINGS_POS_CURRENT 19.520000

CNT_INSTALMENT_MATURE_CUM 7.950000

NAME_CONTRACT_STATUS 0.000000

SK_DPD 0.000000

SK_DPD_DEF 0.000000

dtype: float64

creditcard_bal.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| SK_ID_PREV | 3840312.000000 | 1904503.589900 | 536469.470563 | 1000018.000000 | 1434385.000000 | 1897122.000000 | 2369327.750000 | 2843496.000000 |

| SK_ID_CURR | 3840312.000000 | 278324.207289 | 102704.475133 | 100006.000000 | 189517.000000 | 278396.000000 | 367580.000000 | 456250.000000 |

| MONTHS_BALANCE | 3840312.000000 | -34.521921 | 26.667751 | -96.000000 | -55.000000 | -28.000000 | -11.000000 | -1.000000 |

| AMT_BALANCE | 3840312.000000 | 58300.155262 | 106307.031025 | -420250.185000 | 0.000000 | 0.000000 | 89046.686250 | 1505902.185000 |

| AMT_CREDIT_LIMIT_ACTUAL | 3840312.000000 | 153807.957400 | 165145.699523 | 0.000000 | 45000.000000 | 112500.000000 | 180000.000000 | 1350000.000000 |

| AMT_DRAWINGS_ATM_CURRENT | 3090496.000000 | 5961.324822 | 28225.688579 | -6827.310000 | 0.000000 | 0.000000 | 0.000000 | 2115000.000000 |

| AMT_DRAWINGS_CURRENT | 3840312.000000 | 7433.388179 | 33846.077334 | -6211.620000 | 0.000000 | 0.000000 | 0.000000 | 2287098.315000 |

| AMT_DRAWINGS_OTHER_CURRENT | 3090496.000000 | 288.169582 | 8201.989345 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 1529847.000000 |

| AMT_DRAWINGS_POS_CURRENT | 3090496.000000 | 2968.804848 | 20796.887047 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 2239274.160000 |

| AMT_INST_MIN_REGULARITY | 3535076.000000 | 3540.204129 | 5600.154122 | 0.000000 | 0.000000 | 0.000000 | 6633.911250 | 202882.005000 |

| AMT_PAYMENT_CURRENT | 3072324.000000 | 10280.537702 | 36078.084953 | 0.000000 | 152.370000 | 2702.700000 | 9000.000000 | 4289207.445000 |

| AMT_PAYMENT_TOTAL_CURRENT | 3840312.000000 | 7588.856739 | 32005.987768 | 0.000000 | 0.000000 | 0.000000 | 6750.000000 | 4278315.690000 |

| AMT_RECEIVABLE_PRINCIPAL | 3840312.000000 | 55965.876905 | 102533.616843 | -423305.820000 | 0.000000 | 0.000000 | 85359.240000 | 1472316.795000 |

| AMT_RECIVABLE | 3840312.000000 | 58088.811177 | 105965.369908 | -420250.185000 | 0.000000 | 0.000000 | 88899.491250 | 1493338.185000 |

| AMT_TOTAL_RECEIVABLE | 3840312.000000 | 58098.285489 | 105971.801103 | -420250.185000 | 0.000000 | 0.000000 | 88914.510000 | 1493338.185000 |

| CNT_DRAWINGS_ATM_CURRENT | 3090496.000000 | 0.309449 | 1.100401 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 51.000000 |

| CNT_DRAWINGS_CURRENT | 3840312.000000 | 0.703144 | 3.190347 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 165.000000 |

| CNT_DRAWINGS_OTHER_CURRENT | 3090496.000000 | 0.004812 | 0.082639 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 12.000000 |

| CNT_DRAWINGS_POS_CURRENT | 3090496.000000 | 0.559479 | 3.240649 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 165.000000 |

| CNT_INSTALMENT_MATURE_CUM | 3535076.000000 | 20.825084 | 20.051494 | 0.000000 | 4.000000 | 15.000000 | 32.000000 | 120.000000 |

| SK_DPD | 3840312.000000 | 9.283667 | 97.515700 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 3260.000000 |

| SK_DPD_DEF | 3840312.000000 | 0.331622 | 21.479231 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 3260.000000 |

MONTHS_BALANCE, AMT_BALANCE, AMT_DRAWINGS_ATM_CURRENT, AMT_DRAWINGS_CURRENT, AMT_RECEIVABLE_PRINCIPAL, AMT_RECIVABLE, AMT_TOTAL_RECEIVABLE columns have negative values.

Description of The Dataset:

SK_ID_PREV ID of previous credit in Home Credit related to loan in our sample. (One loan in our sample can have 0,1,2 or more previous loans in Home Credit).

SK_ID_CURR → ID of loan in our sample.

MONTHS_BALANCE → Month of balance relative to application date (-1 means the freshest balance date).

AMT_BALANCE → Balance during the month of previous credit.

AMT_CREDIT_LIMIT_ACTUAL → Credit card limit during the month of the previous credit.

AMT_DRAWINGS_ATM_CURRENT → Amount drawing at ATM during the month of the previous credit.

AMT_DRAWINGS_CURRENT → Amount drawing during the month of the previous credit.

AMT_DRAWINGS_OTHER_CURRENT → Amount of other drawings during the month of the previous credit.

AMT_DRAWINGS_POS_CURRENT → Amount drawing or buying goods during the month of the previous credit.

AMT_INST_MIN_REGULARITY → Minimal installment for this month of the previous credit.

AMT_PAYMENT_CURRENT → How much did the client pay during the month on the previous credit.

AMT_PAYMENT_TOTAL_CURRENT → How much did the client pay during the month in total on the previous credit.

AMT_RECEIVABLE_PRINCIPAL → Amount receivable for principal on the previous credit.

AMT_RECIVABLE → Amount receivable on the previous credit.

AMT_TOTAL_RECEIVABLE → Total amount receivable on the previous credit.

CNT_DRAWINGS_ATM_CURRENT → Number of drawings at ATM during this month on the previous credit.

CNT_DRAWINGS_CURRENT → Number of drawings during this month on the previous credit.

CNT_DRAWINGS_OTHER_CURRENT → Number of other drawings during this month on the previous credit.

CNT_DRAWINGS_POS_CURRENT → Number of drawings for goods during this month on the previous credit.

CNT_INSTALMENT_MATURE_CUM → Number of paid installments on the previous credit.

NAME_CONTRACT_STATUS → Contract status during the month.

SK_DPD → DPD (days past due) during the month of previous credit.

SK_DPD_DEF → DPD during the month with tolerance (debts with low loan amounts are ignored) of the previous credit.

# Replacing the missing values with the means of each column

creditcard_bal['AMT_DRAWINGS_ATM_CURRENT'].fillna(creditcard_bal['AMT_DRAWINGS_ATM_CURRENT'].mean(), inplace = True)

creditcard_bal['AMT_DRAWINGS_OTHER_CURRENT'].fillna(creditcard_bal['AMT_DRAWINGS_OTHER_CURRENT'].mean(), inplace = True)

creditcard_bal['AMT_DRAWINGS_POS_CURRENT'].fillna(creditcard_bal['AMT_DRAWINGS_POS_CURRENT'].mean(), inplace = True)

creditcard_bal['AMT_INST_MIN_REGULARITY'].fillna(creditcard_bal['AMT_INST_MIN_REGULARITY'].mean(), inplace = True)

creditcard_bal['AMT_PAYMENT_CURRENT'].fillna(creditcard_bal['AMT_PAYMENT_CURRENT'].mean(), inplace = True)

creditcard_bal['CNT_DRAWINGS_ATM_CURRENT'].fillna(creditcard_bal['CNT_DRAWINGS_ATM_CURRENT'].mean(), inplace = True)

creditcard_bal['CNT_DRAWINGS_OTHER_CURRENT'].fillna(creditcard_bal['CNT_DRAWINGS_OTHER_CURRENT'].mean(), inplace = True)

creditcard_bal['CNT_DRAWINGS_POS_CURRENT'].fillna(creditcard_bal['CNT_DRAWINGS_POS_CURRENT'].mean(), inplace = True)

creditcard_bal['CNT_INSTALMENT_MATURE_CUM'].fillna(creditcard_bal['CNT_INSTALMENT_MATURE_CUM'].mean(), inplace = True)

# checking for missing values in the columns

creditcard_bal.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

MONTHS_BALANCE 0

AMT_BALANCE 0

AMT_CREDIT_LIMIT_ACTUAL 0

AMT_DRAWINGS_ATM_CURRENT 0

AMT_DRAWINGS_CURRENT 0

AMT_DRAWINGS_OTHER_CURRENT 0

AMT_DRAWINGS_POS_CURRENT 0

AMT_INST_MIN_REGULARITY 0

AMT_PAYMENT_CURRENT 0

AMT_PAYMENT_TOTAL_CURRENT 0

AMT_RECEIVABLE_PRINCIPAL 0

AMT_RECIVABLE 0

AMT_TOTAL_RECEIVABLE 0

CNT_DRAWINGS_ATM_CURRENT 0

CNT_DRAWINGS_CURRENT 0

CNT_DRAWINGS_OTHER_CURRENT 0

CNT_DRAWINGS_POS_CURRENT 0

CNT_INSTALMENT_MATURE_CUM 0

NAME_CONTRACT_STATUS 0

SK_DPD 0

SK_DPD_DEF 0

dtype: int64

#Checking the no. of unique SK_ID_CURR values

count1 = creditcard_bal["SK_ID_CURR"].unique()

count1.shape

(103558,)

For each unique SK_ID_CURR there are duplicate rows that provide the data for multiple dates so we need to keep only that row that has the most recent information and drop the old information. In this dataset we will keep only those rows that have the max MONTHS_BALANCE (least negative values as to the latest Month of balance relative to application date (-1 means the freshest balance date)) and delete the other rows for each unique SK_ID_CURR.

creditcard_bal = creditcard_bal.groupby('SK_ID_CURR', group_keys=False).apply(lambda x: x.loc[x.MONTHS_BALANCE.idxmax()])

creditcard_bal.shape

(103558, 23)

creditcard_bal['index'] = creditcard_bal.index

creditcard_bal.index.name = None

creditcard_bal.drop(['SK_ID_PREV', 'index'], axis = 1, inplace = True)

creditcard_bal.head()

| SK_ID_CURR | MONTHS_BALANCE | AMT_BALANCE | AMT_CREDIT_LIMIT_ACTUAL | AMT_DRAWINGS_ATM_CURRENT | AMT_DRAWINGS_CURRENT | AMT_DRAWINGS_OTHER_CURRENT | AMT_DRAWINGS_POS_CURRENT | AMT_INST_MIN_REGULARITY | AMT_PAYMENT_CURRENT | AMT_PAYMENT_TOTAL_CURRENT | AMT_RECEIVABLE_PRINCIPAL | AMT_RECIVABLE | AMT_TOTAL_RECEIVABLE | CNT_DRAWINGS_ATM_CURRENT | CNT_DRAWINGS_CURRENT | CNT_DRAWINGS_OTHER_CURRENT | CNT_DRAWINGS_POS_CURRENT | CNT_INSTALMENT_MATURE_CUM | NAME_CONTRACT_STATUS | SK_DPD | SK_DPD_DEF | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 100006 | 100006 | -1 | 0.000000 | 270000 | 5961.324822 | 0.000000 | 288.169582 | 2968.804848 | 0.000000 | 10280.537702 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.309449 | 0 | 0.004812 | 0.559479 | 0.000000 | Active | 0 | 0 |

| 100011 | 100011 | -2 | 0.000000 | 90000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 563.355000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0 | 0.000000 | 0.000000 | 33.000000 | Active | 0 | 0 |

| 100013 | 100013 | -1 | 0.000000 | 45000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 274.320000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0 | 0.000000 | 0.000000 | 22.000000 | Active | 0 | 0 |

| 100021 | 100021 | -2 | 0.000000 | 675000 | 5961.324822 | 0.000000 | 288.169582 | 2968.804848 | 0.000000 | 10280.537702 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.309449 | 0 | 0.004812 | 0.559479 | 0.000000 | Completed | 0 | 0 |

| 100023 | 100023 | -4 | 0.000000 | 225000 | 5961.324822 | 0.000000 | 288.169582 | 2968.804848 | 0.000000 | 10280.537702 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.309449 | 0 | 0.004812 | 0.559479 | 0.000000 | Active | 0 | 0 |

The creditcard_balance data set is now clean with no missing values and duplicates and is ready to be merged with the other datasets.

STEP 3: Loading and preparing the installment_payments data set

instal_paymt = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/installments_payments.csv')

instal_paymt.head()

| SK_ID_PREV | SK_ID_CURR | NUM_INSTALMENT_VERSION | NUM_INSTALMENT_NUMBER | DAYS_INSTALMENT | DAYS_ENTRY_PAYMENT | AMT_INSTALMENT | AMT_PAYMENT | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1054186 | 161674 | 1.000000 | 6 | -1180.000000 | -1187.000000 | 6948.360000 | 6948.360000 |

| 1 | 1330831 | 151639 | 0.000000 | 34 | -2156.000000 | -2156.000000 | 1716.525000 | 1716.525000 |

| 2 | 2085231 | 193053 | 2.000000 | 1 | -63.000000 | -63.000000 | 25425.000000 | 25425.000000 |

| 3 | 2452527 | 199697 | 1.000000 | 3 | -2418.000000 | -2426.000000 | 24350.130000 | 24350.130000 |

| 4 | 2714724 | 167756 | 1.000000 | 2 | -1383.000000 | -1366.000000 | 2165.040000 | 2160.585000 |

instal_paymt.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 13605401 entries, 0 to 13605400

Data columns (total 8 columns):

# Column Dtype

--- ------ -----

0 SK_ID_PREV int64

1 SK_ID_CURR int64

2 NUM_INSTALMENT_VERSION float64

3 NUM_INSTALMENT_NUMBER int64

4 DAYS_INSTALMENT float64

5 DAYS_ENTRY_PAYMENT float64

6 AMT_INSTALMENT float64

7 AMT_PAYMENT float64

dtypes: float64(5), int64(3)

memory usage: 830.4 MB

# checking for missing values in each column

instal_paymt.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

NUM_INSTALMENT_VERSION 0

NUM_INSTALMENT_NUMBER 0

DAYS_INSTALMENT 0

DAYS_ENTRY_PAYMENT 2905

AMT_INSTALMENT 0

AMT_PAYMENT 2905

dtype: int64

instal_paymt.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| SK_ID_PREV | 13605401.000000 | 1903364.969549 | 536202.905546 | 1000001.000000 | 1434191.000000 | 1896520.000000 | 2369094.000000 | 2843499.000000 |

| SK_ID_CURR | 13605401.000000 | 278444.881738 | 102718.310411 | 100001.000000 | 189639.000000 | 278685.000000 | 367530.000000 | 456255.000000 |

| NUM_INSTALMENT_VERSION | 13605401.000000 | 0.856637 | 1.035216 | 0.000000 | 0.000000 | 1.000000 | 1.000000 | 178.000000 |

| NUM_INSTALMENT_NUMBER | 13605401.000000 | 18.870896 | 26.664067 | 1.000000 | 4.000000 | 8.000000 | 19.000000 | 277.000000 |

| DAYS_INSTALMENT | 13605401.000000 | -1042.269992 | 800.946284 | -2922.000000 | -1654.000000 | -818.000000 | -361.000000 | -1.000000 |

| DAYS_ENTRY_PAYMENT | 13602496.000000 | -1051.113684 | 800.585883 | -4921.000000 | -1662.000000 | -827.000000 | -370.000000 | -1.000000 |

| AMT_INSTALMENT | 13605401.000000 | 17050.906989 | 50570.254429 | 0.000000 | 4226.085000 | 8884.080000 | 16710.210000 | 3771487.845000 |

| AMT_PAYMENT | 13602496.000000 | 17238.223250 | 54735.783981 | 0.000000 | 3398.265000 | 8125.515000 | 16108.425000 | 3771487.845000 |

DAYS_INSTALMENT, DAYS_ENTRY_PAYMENT has negative values.

Description of The Dataset:

SK_ID_PREV → ID of previous credit in Home Credit related to loan in our sample. (One loan in our sample can have 0,1,2 or more previous loans in Home Credit).

SK_ID_CURR → ID of loan in our sample.

NUM_INSTALMENT_VERSION → Version of installment calendar (0 is for credit card) of previous credit. Change of installment version from month to month signifies that some parameter of payment calendar has changed.

NUM_INSTALMENT_NUMBER → On which installment we observe payment.

DAYS_INSTALMENT → When the installment of previous credit was supposed to be paid (relative to application date of current loan).

DAYS_ENTRY_PAYMENT → When was the installments of previous credit paid actually (relative to application date of current loan).

AMT_INSTALMENT → What was the prescribed installment amount of previous credit on this installment.

AMT_PAYMENT → What the client actually paid on previous credit on this installment.

# Replacing the missing values with the means of each column

instal_paymt['DAYS_ENTRY_PAYMENT'].fillna(instal_paymt['DAYS_ENTRY_PAYMENT'].mean(), inplace = True)

instal_paymt['AMT_PAYMENT'].fillna(instal_paymt['AMT_PAYMENT'].mean(), inplace = True)

# checking for missing values in each column

instal_paymt.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

NUM_INSTALMENT_VERSION 0

NUM_INSTALMENT_NUMBER 0

DAYS_INSTALMENT 0

DAYS_ENTRY_PAYMENT 0

AMT_INSTALMENT 0

AMT_PAYMENT 0

dtype: int64

#Checking the no. of unique SK_ID_CURR values

count2 = instal_paymt["SK_ID_CURR"].unique()

count2.shape

(339587,)

For each unique SK_ID_CURR there are duplicate rows that provide the data for multiple dates so we need to keep only that row that has the most recent information and drop the old information. In this dataset we will keep only those rows that have the max DAYS_INSTALMENT When the installment of previous credit was supposed to be paid (relative to application date of current loan, -1 means closer to the application date)) and delete the other rows for each unique SK_ID_CURR.

instal_paymt = instal_paymt.groupby('SK_ID_CURR', group_keys=False).apply(lambda x: x.loc[x.DAYS_INSTALMENT.idxmax()])

instal_paymt.shape

(339587, 8)

instal_paymt['index'] = instal_paymt.index

instal_paymt.index.name = None

instal_paymt.drop(['SK_ID_PREV', 'index'], axis = 1, inplace = True)

instal_paymt.head()

| SK_ID_CURR | NUM_INSTALMENT_VERSION | NUM_INSTALMENT_NUMBER | DAYS_INSTALMENT | DAYS_ENTRY_PAYMENT | AMT_INSTALMENT | AMT_PAYMENT | |

|---|---|---|---|---|---|---|---|

| 100001 | 100001.000000 | 2.000000 | 4.000000 | -1619.000000 | -1628.000000 | 17397.900000 | 17397.900000 |

| 100002 | 100002.000000 | 2.000000 | 19.000000 | -25.000000 | -49.000000 | 53093.745000 | 53093.745000 |

| 100003 | 100003.000000 | 2.000000 | 7.000000 | -536.000000 | -544.000000 | 560835.360000 | 560835.360000 |

| 100004 | 100004.000000 | 2.000000 | 3.000000 | -724.000000 | -727.000000 | 10573.965000 | 10573.965000 |

| 100005 | 100005.000000 | 2.000000 | 9.000000 | -466.000000 | -470.000000 | 17656.245000 | 17656.245000 |

The installment_payments data set is now clean with no missing values and duplicates and is ready to be merged with the other datasets.

STEP 4: Loading and preparing the previous_application data set

prev_appln = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/previous_application.csv')

prev_appln.head()

| SK_ID_PREV | SK_ID_CURR | NAME_CONTRACT_TYPE | AMT_ANNUITY | AMT_APPLICATION | AMT_CREDIT | AMT_DOWN_PAYMENT | AMT_GOODS_PRICE | WEEKDAY_APPR_PROCESS_START | HOUR_APPR_PROCESS_START | FLAG_LAST_APPL_PER_CONTRACT | NFLAG_LAST_APPL_IN_DAY | RATE_DOWN_PAYMENT | RATE_INTEREST_PRIMARY | RATE_INTEREST_PRIVILEGED | NAME_CASH_LOAN_PURPOSE | NAME_CONTRACT_STATUS | DAYS_DECISION | NAME_PAYMENT_TYPE | CODE_REJECT_REASON | NAME_TYPE_SUITE | NAME_CLIENT_TYPE | NAME_GOODS_CATEGORY | NAME_PORTFOLIO | NAME_PRODUCT_TYPE | CHANNEL_TYPE | SELLERPLACE_AREA | NAME_SELLER_INDUSTRY | CNT_PAYMENT | NAME_YIELD_GROUP | PRODUCT_COMBINATION | DAYS_FIRST_DRAWING | DAYS_FIRST_DUE | DAYS_LAST_DUE_1ST_VERSION | DAYS_LAST_DUE | DAYS_TERMINATION | NFLAG_INSURED_ON_APPROVAL | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2030495 | 271877 | Consumer loans | 1730.430000 | 17145.000000 | 17145.000000 | 0.000000 | 17145.000000 | SATURDAY | 15 | Y | 1 | 0.000000 | 0.182832 | 0.867336 | XAP | Approved | -73 | Cash through the bank | XAP | NaN | Repeater | Mobile | POS | XNA | Country-wide | 35 | Connectivity | 12.000000 | middle | POS mobile with interest | 365243.000000 | -42.000000 | 300.000000 | -42.000000 | -37.000000 | 0.000000 |

| 1 | 2802425 | 108129 | Cash loans | 25188.615000 | 607500.000000 | 679671.000000 | nan | 607500.000000 | THURSDAY | 11 | Y | 1 | nan | nan | nan | XNA | Approved | -164 | XNA | XAP | Unaccompanied | Repeater | XNA | Cash | x-sell | Contact center | -1 | XNA | 36.000000 | low_action | Cash X-Sell: low | 365243.000000 | -134.000000 | 916.000000 | 365243.000000 | 365243.000000 | 1.000000 |

| 2 | 2523466 | 122040 | Cash loans | 15060.735000 | 112500.000000 | 136444.500000 | nan | 112500.000000 | TUESDAY | 11 | Y | 1 | nan | nan | nan | XNA | Approved | -301 | Cash through the bank | XAP | Spouse, partner | Repeater | XNA | Cash | x-sell | Credit and cash offices | -1 | XNA | 12.000000 | high | Cash X-Sell: high | 365243.000000 | -271.000000 | 59.000000 | 365243.000000 | 365243.000000 | 1.000000 |

| 3 | 2819243 | 176158 | Cash loans | 47041.335000 | 450000.000000 | 470790.000000 | nan | 450000.000000 | MONDAY | 7 | Y | 1 | nan | nan | nan | XNA | Approved | -512 | Cash through the bank | XAP | NaN | Repeater | XNA | Cash | x-sell | Credit and cash offices | -1 | XNA | 12.000000 | middle | Cash X-Sell: middle | 365243.000000 | -482.000000 | -152.000000 | -182.000000 | -177.000000 | 1.000000 |

| 4 | 1784265 | 202054 | Cash loans | 31924.395000 | 337500.000000 | 404055.000000 | nan | 337500.000000 | THURSDAY | 9 | Y | 1 | nan | nan | nan | Repairs | Refused | -781 | Cash through the bank | HC | NaN | Repeater | XNA | Cash | walk-in | Credit and cash offices | -1 | XNA | 24.000000 | high | Cash Street: high | nan | nan | nan | nan | nan | nan |

prev_appln.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1670214 entries, 0 to 1670213

Data columns (total 37 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 SK_ID_PREV 1670214 non-null int64

1 SK_ID_CURR 1670214 non-null int64

2 NAME_CONTRACT_TYPE 1670214 non-null object

3 AMT_ANNUITY 1297979 non-null float64

4 AMT_APPLICATION 1670214 non-null float64

5 AMT_CREDIT 1670213 non-null float64

6 AMT_DOWN_PAYMENT 774370 non-null float64

7 AMT_GOODS_PRICE 1284699 non-null float64

8 WEEKDAY_APPR_PROCESS_START 1670214 non-null object

9 HOUR_APPR_PROCESS_START 1670214 non-null int64

10 FLAG_LAST_APPL_PER_CONTRACT 1670214 non-null object

11 NFLAG_LAST_APPL_IN_DAY 1670214 non-null int64

12 RATE_DOWN_PAYMENT 774370 non-null float64

13 RATE_INTEREST_PRIMARY 5951 non-null float64

14 RATE_INTEREST_PRIVILEGED 5951 non-null float64

15 NAME_CASH_LOAN_PURPOSE 1670214 non-null object

16 NAME_CONTRACT_STATUS 1670214 non-null object

17 DAYS_DECISION 1670214 non-null int64

18 NAME_PAYMENT_TYPE 1670214 non-null object

19 CODE_REJECT_REASON 1670214 non-null object

20 NAME_TYPE_SUITE 849809 non-null object

21 NAME_CLIENT_TYPE 1670214 non-null object

22 NAME_GOODS_CATEGORY 1670214 non-null object

23 NAME_PORTFOLIO 1670214 non-null object

24 NAME_PRODUCT_TYPE 1670214 non-null object

25 CHANNEL_TYPE 1670214 non-null object

26 SELLERPLACE_AREA 1670214 non-null int64

27 NAME_SELLER_INDUSTRY 1670214 non-null object

28 CNT_PAYMENT 1297984 non-null float64

29 NAME_YIELD_GROUP 1670214 non-null object

30 PRODUCT_COMBINATION 1669868 non-null object

31 DAYS_FIRST_DRAWING 997149 non-null float64

32 DAYS_FIRST_DUE 997149 non-null float64

33 DAYS_LAST_DUE_1ST_VERSION 997149 non-null float64

34 DAYS_LAST_DUE 997149 non-null float64

35 DAYS_TERMINATION 997149 non-null float64

36 NFLAG_INSURED_ON_APPROVAL 997149 non-null float64

dtypes: float64(15), int64(6), object(16)

memory usage: 471.5+ MB

# checking for missing values

prev_appln.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

NAME_CONTRACT_TYPE 0

AMT_ANNUITY 372235

AMT_APPLICATION 0

AMT_CREDIT 1

AMT_DOWN_PAYMENT 895844

AMT_GOODS_PRICE 385515

WEEKDAY_APPR_PROCESS_START 0

HOUR_APPR_PROCESS_START 0

FLAG_LAST_APPL_PER_CONTRACT 0

NFLAG_LAST_APPL_IN_DAY 0

RATE_DOWN_PAYMENT 895844

RATE_INTEREST_PRIMARY 1664263

RATE_INTEREST_PRIVILEGED 1664263

NAME_CASH_LOAN_PURPOSE 0

NAME_CONTRACT_STATUS 0

DAYS_DECISION 0

NAME_PAYMENT_TYPE 0

CODE_REJECT_REASON 0

NAME_TYPE_SUITE 820405

NAME_CLIENT_TYPE 0

NAME_GOODS_CATEGORY 0

NAME_PORTFOLIO 0

NAME_PRODUCT_TYPE 0

CHANNEL_TYPE 0

SELLERPLACE_AREA 0

NAME_SELLER_INDUSTRY 0

CNT_PAYMENT 372230

NAME_YIELD_GROUP 0

PRODUCT_COMBINATION 346

DAYS_FIRST_DRAWING 673065

DAYS_FIRST_DUE 673065

DAYS_LAST_DUE_1ST_VERSION 673065

DAYS_LAST_DUE 673065

DAYS_TERMINATION 673065

NFLAG_INSURED_ON_APPROVAL 673065

dtype: int64

# Finding the % of missing values

round(100*(prev_appln.isnull().sum()/len(prev_appln.index)),2)

SK_ID_PREV 0.000000

SK_ID_CURR 0.000000

NAME_CONTRACT_TYPE 0.000000

AMT_ANNUITY 22.290000

AMT_APPLICATION 0.000000

AMT_CREDIT 0.000000

AMT_DOWN_PAYMENT 53.640000

AMT_GOODS_PRICE 23.080000

WEEKDAY_APPR_PROCESS_START 0.000000

HOUR_APPR_PROCESS_START 0.000000

FLAG_LAST_APPL_PER_CONTRACT 0.000000

NFLAG_LAST_APPL_IN_DAY 0.000000

RATE_DOWN_PAYMENT 53.640000

RATE_INTEREST_PRIMARY 99.640000

RATE_INTEREST_PRIVILEGED 99.640000

NAME_CASH_LOAN_PURPOSE 0.000000

NAME_CONTRACT_STATUS 0.000000

DAYS_DECISION 0.000000

NAME_PAYMENT_TYPE 0.000000

CODE_REJECT_REASON 0.000000

NAME_TYPE_SUITE 49.120000

NAME_CLIENT_TYPE 0.000000

NAME_GOODS_CATEGORY 0.000000

NAME_PORTFOLIO 0.000000

NAME_PRODUCT_TYPE 0.000000

CHANNEL_TYPE 0.000000

SELLERPLACE_AREA 0.000000

NAME_SELLER_INDUSTRY 0.000000

CNT_PAYMENT 22.290000

NAME_YIELD_GROUP 0.000000

PRODUCT_COMBINATION 0.020000

DAYS_FIRST_DRAWING 40.300000

DAYS_FIRST_DUE 40.300000

DAYS_LAST_DUE_1ST_VERSION 40.300000

DAYS_LAST_DUE 40.300000

DAYS_TERMINATION 40.300000

NFLAG_INSURED_ON_APPROVAL 40.300000

dtype: float64

#Assigning NULL percentage value to variable

prevapp_null = round(100*(prev_appln.isnull().sum()/len(prev_appln.index)),2)

# find columns with more than 50% missing values

columnprev = prevapp_null[prevapp_null >= 50].index

# drop columns with high null percentage

prev_appln.drop(columnprev,axis = 1,inplace = True)

#check null percentage after dropping

round(100*(prev_appln.isnull().sum()/len(prev_appln.index)),2)

SK_ID_PREV 0.000000

SK_ID_CURR 0.000000

NAME_CONTRACT_TYPE 0.000000

AMT_ANNUITY 22.290000

AMT_APPLICATION 0.000000

AMT_CREDIT 0.000000

AMT_GOODS_PRICE 23.080000

WEEKDAY_APPR_PROCESS_START 0.000000

HOUR_APPR_PROCESS_START 0.000000

FLAG_LAST_APPL_PER_CONTRACT 0.000000

NFLAG_LAST_APPL_IN_DAY 0.000000

NAME_CASH_LOAN_PURPOSE 0.000000

NAME_CONTRACT_STATUS 0.000000

DAYS_DECISION 0.000000

NAME_PAYMENT_TYPE 0.000000

CODE_REJECT_REASON 0.000000

NAME_TYPE_SUITE 49.120000

NAME_CLIENT_TYPE 0.000000

NAME_GOODS_CATEGORY 0.000000

NAME_PORTFOLIO 0.000000

NAME_PRODUCT_TYPE 0.000000

CHANNEL_TYPE 0.000000

SELLERPLACE_AREA 0.000000

NAME_SELLER_INDUSTRY 0.000000

CNT_PAYMENT 22.290000

NAME_YIELD_GROUP 0.000000

PRODUCT_COMBINATION 0.020000

DAYS_FIRST_DRAWING 40.300000

DAYS_FIRST_DUE 40.300000

DAYS_LAST_DUE_1ST_VERSION 40.300000

DAYS_LAST_DUE 40.300000

DAYS_TERMINATION 40.300000

NFLAG_INSURED_ON_APPROVAL 40.300000

dtype: float64

# checking the shape of the dataframe after the columns are dropped

prev_appln.shape

(1670214, 33)

4 columns have been dropped.

#getting the list of columns that have missing values > 0

null_count = prev_appln.isnull().sum()

null_ap = null_count[null_count > 0]

null_ap

AMT_ANNUITY 372235

AMT_CREDIT 1

AMT_GOODS_PRICE 385515

NAME_TYPE_SUITE 820405

CNT_PAYMENT 372230

PRODUCT_COMBINATION 346

DAYS_FIRST_DRAWING 673065

DAYS_FIRST_DUE 673065

DAYS_LAST_DUE_1ST_VERSION 673065

DAYS_LAST_DUE 673065

DAYS_TERMINATION 673065

NFLAG_INSURED_ON_APPROVAL 673065

dtype: int64

prev_appln.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| SK_ID_PREV | 1670214.000000 | 1923089.135331 | 532597.958696 | 1000001.000000 | 1461857.250000 | 1923110.500000 | 2384279.750000 | 2845382.000000 |

| SK_ID_CURR | 1670214.000000 | 278357.174099 | 102814.823849 | 100001.000000 | 189329.000000 | 278714.500000 | 367514.000000 | 456255.000000 |

| AMT_ANNUITY | 1297979.000000 | 15955.120659 | 14782.137335 | 0.000000 | 6321.780000 | 11250.000000 | 20658.420000 | 418058.145000 |

| AMT_APPLICATION | 1670214.000000 | 175233.860360 | 292779.762387 | 0.000000 | 18720.000000 | 71046.000000 | 180360.000000 | 6905160.000000 |

| AMT_CREDIT | 1670213.000000 | 196114.021218 | 318574.616546 | 0.000000 | 24160.500000 | 80541.000000 | 216418.500000 | 6905160.000000 |

| AMT_GOODS_PRICE | 1284699.000000 | 227847.279283 | 315396.557937 | 0.000000 | 50841.000000 | 112320.000000 | 234000.000000 | 6905160.000000 |

| HOUR_APPR_PROCESS_START | 1670214.000000 | 12.484182 | 3.334028 | 0.000000 | 10.000000 | 12.000000 | 15.000000 | 23.000000 |

| NFLAG_LAST_APPL_IN_DAY | 1670214.000000 | 0.996468 | 0.059330 | 0.000000 | 1.000000 | 1.000000 | 1.000000 | 1.000000 |

| DAYS_DECISION | 1670214.000000 | -880.679668 | 779.099667 | -2922.000000 | -1300.000000 | -581.000000 | -280.000000 | -1.000000 |

| SELLERPLACE_AREA | 1670214.000000 | 313.951115 | 7127.443459 | -1.000000 | -1.000000 | 3.000000 | 82.000000 | 4000000.000000 |

| CNT_PAYMENT | 1297984.000000 | 16.054082 | 14.567288 | 0.000000 | 6.000000 | 12.000000 | 24.000000 | 84.000000 |

| DAYS_FIRST_DRAWING | 997149.000000 | 342209.855039 | 88916.115834 | -2922.000000 | 365243.000000 | 365243.000000 | 365243.000000 | 365243.000000 |

| DAYS_FIRST_DUE | 997149.000000 | 13826.269337 | 72444.869708 | -2892.000000 | -1628.000000 | -831.000000 | -411.000000 | 365243.000000 |

| DAYS_LAST_DUE_1ST_VERSION | 997149.000000 | 33767.774054 | 106857.034789 | -2801.000000 | -1242.000000 | -361.000000 | 129.000000 | 365243.000000 |

| DAYS_LAST_DUE | 997149.000000 | 76582.403064 | 149647.415123 | -2889.000000 | -1314.000000 | -537.000000 | -74.000000 | 365243.000000 |

| DAYS_TERMINATION | 997149.000000 | 81992.343838 | 153303.516729 | -2874.000000 | -1270.000000 | -499.000000 | -44.000000 | 365243.000000 |

| NFLAG_INSURED_ON_APPROVAL | 997149.000000 | 0.332570 | 0.471134 | 0.000000 | 0.000000 | 0.000000 | 1.000000 | 1.000000 |

DAYS_DECISION, SELLERPLACE_AREA, DAYS_FIRST_DRAWING, DAYS_FIRST_DUE, DAYS_LAST_DUE_1ST_VERSION, DAYS_LAST_DUE, DAYS_TERMINATION have negative values.

Description of The Dataset:

SK_ID_PREV → ID of previous credit in Home Credit related to loan in our sample. (One loan in our sample can have 0,1,2 or more previous loans in Home Credit).

SK_ID_CURR → ID of loan in our sample.

NAME_CONTRACT_TYPE → Identification if loan is cash or revolving.

AMT_ANNUITY → Loan annuity.

AMT_APPLICATION → For how much credit did client ask on the previous application.

AMT_CREDIT → Credit amount of the loan.

AMT_GOODS_PRICE → For consumer loans it is the price of the goods for which the loan is given.

WEEKDAY_APPR_PROCESS_START → On which day of the week did the client apply for the loan.

HOUR_APPR_PROCESS_START → Approximately at what hour did the client apply for the loan.

FLAG_LAST_APPL_PER_CONTRACT → Flag if it was last application for the previous contract. Sometimes by mistake of client or our clerk there could be more applications for one single contract.

NFLAG_LAST_APPL_IN_DAY → Flag if the application was the last application per day of the client. Sometimes clients apply for more applications a day. Rarely it could also be error in our system that one application is in the database twice.

NAME_CASH_LOAN_PURPOSE → Purpose of the cash loan.

NAME_CONTRACT_STATUS → Contract status during the month.

DAYS_DECISION → Relative to current application when was the decision about previous application made.

NAME_PAYMENT_TYPE → Payment method that client chose to pay for the previous application.

CODE_REJECT_REASON → Why was the previous application rejected.

NAME_TYPE_SUITE Who was accompanying client when he was applying for the loan.

NAME_CLIENT_TYPE → Was the client old or new client when applying for the previous application.

NAME_GOODS_CATEGORY → What kind of goods did the client apply for in the previous application.

NAME_PORTFOLIO → Was the previous application for CASH, POS, CAR, …

NAME_PRODUCT_TYPE → Was the previous application x-sell o walk-in.

CHANNEL_TYPE → Through which channel we acquired the client on the previous application.

SELLERPLACE_AREA → Selling area of seller place of the previous application.

NAME_SELLER_INDUSTRY → The industry of the seller.

CNT_PAYMENT → Term of previous credit at application of the previous application.

NAME_YIELD_GROUP → Grouped interest rate into small medium and high of the previous application.

PRODUCT_COMBINATION → Detailed product combination of the previous application.

DAYS_FIRST_DRAWING → Relative to application date of current application when was the first disbursement of the previous application.

DAYS_FIRST_DUE → Relative to application date of current application when was the first due supposed to be of the previous application.

DAYS_LAST_DUE_1ST_VERSION → Relative to application date of current application when was the first due of the previous application.

DAYS_LAST_DUE → Relative to application date of current application when was the last due date of the previous application.

DAYS_TERMINATION → Relative to application date of current application when was the expected termination of the previous application. Description of the data set.

SK_ID_PREV → ID of previous credit in Home Credit related to loan in our sample. (One loan in our sample can have 0,1,2 or more previous loans in Home Credit).

SK_ID_CURR → ID of loan in our sample.

NAME_CONTRACT_TYPE Identification if loan is cash or revolving.

AMT_ANNUITY → Loan annuity.

AMT_APPLICATION → For how much credit did client ask on the previous application.

AMT_CREDIT → Credit amount of the loan.

AMT_GOODS_PRICE → For consumer loans it is the price of the goods for which the loan is given.

WEEKDAY_APPR_PROCESS_START → On which day of the week did the client apply for the loan.

HOUR_APPR_PROCESS_START → Approximately at what hour did the client apply for the loan.

FLAG_LAST_APPL_PER_CONTRACT → Flag if it was last application for the previous contract. Sometimes by mistake of client or our clerk there could be more applications for one single contract.

NFLAG_LAST_APPL_IN_DAY → Flag if the application was the last application per day of the client. Sometimes clients apply for more applications a day. Rarely it could also be error in our system that one application is in the database twice.

NAME_CASH_LOAN_PURPOSE → Purpose of the cash loan.

NAME_CONTRACT_STATUS → Contract status during the month.

DAYS_DECISION → Relative to current application when was the decision about previous application made.

NAME_PAYMENT_TYPE → Payment method that client chose to pay for the previous application.

CODE_REJECT_REASON → Why was the previous application rejected.

NAME_TYPE_SUITE → Who was accompanying client when he was applying for the loan.

NAME_CLIENT_TYPE → Was the client old or new client when applying for the previous application.

NAME_GOODS_CATEGORY → What kind of goods did the client apply for in the previous application.

NAME_PORTFOLIO → Was the previous application for CASH, POS, CAR, …

NAME_PRODUCT_TYPE → Was the previous application x-sell o walk-in.

CHANNEL_TYPE → Through which channel we acquired the client on the previous application.

SELLERPLACE_AREA → Selling area of seller place of the previous application.

NAME_SELLER_INDUSTRY → The industry of the seller.

CNT_PAYMENT → Term of previous credit at application of the previous application.

NAME_YIELD_GROUP → Grouped interest rate into small medium and high of the previous application.

PRODUCT_COMBINATION → Detailed product combination of the previous application.

DAYS_FIRST_DRAWING → Relative to application date of current application when was the first disbursement of the previous application.

DAYS_FIRST_DUE → Relative to application date of current application when was the first due supposed to be of the previous application.

DAYS_LAST_DUE_1ST_VERSION → Relative to application date of current application when was the first due of the previous application.

DAYS_LAST_DUE → Relative to application date of current application when was the last due date of the previous application.

DAYS_TERMINATION → Relative to application date of current application when was the expected termination of the previous application.

NFLAG_INSURED_ON_APPROVAL → Did the client requested insurance during the previous application.

NFLAG_INSURED_ON_APPROVAL → Did the client requested insurance during the previous application.

# Replacing the missing values for the columns

# For the numerical values, replacing the missing values with mean of their respective columns

prev_appln['AMT_ANNUITY'].fillna(prev_appln['AMT_ANNUITY'].mean(), inplace = True)

prev_appln['AMT_CREDIT'].fillna(prev_appln['AMT_CREDIT'].mean(), inplace = True)

prev_appln['AMT_GOODS_PRICE'].fillna(prev_appln['AMT_GOODS_PRICE'].mean(), inplace = True)

prev_appln['CNT_PAYMENT'].fillna(prev_appln['CNT_PAYMENT'].mean(), inplace = True)

prev_appln['DAYS_FIRST_DRAWING'].fillna(prev_appln['DAYS_FIRST_DRAWING'].mean(), inplace = True)

prev_appln['DAYS_FIRST_DUE'].fillna(prev_appln['DAYS_FIRST_DUE'].mean(), inplace = True)

prev_appln['DAYS_LAST_DUE_1ST_VERSION'].fillna(prev_appln['DAYS_LAST_DUE_1ST_VERSION'].mean(), inplace = True)

prev_appln['DAYS_LAST_DUE'].fillna(prev_appln['DAYS_LAST_DUE'].mean(), inplace = True)

prev_appln['DAYS_TERMINATION'].fillna(prev_appln['DAYS_TERMINATION'].mean(), inplace = True)

prev_appln['NFLAG_INSURED_ON_APPROVAL'].fillna(prev_appln['NFLAG_INSURED_ON_APPROVAL'].mean(), inplace = True)

# For the categorical values, replacing the missing values with most frequently appearing values

# Getting the mode of the categorical columns and for no of family members

print(prev_appln['NAME_TYPE_SUITE'].mode())

print(prev_appln['PRODUCT_COMBINATION'].mode())

0 Unaccompanied

dtype: object

0 Cash

dtype: object

# Replacing the missing values for the below with the most frequently appearing values from above

prev_appln.loc[pd.isnull(prev_appln['NAME_TYPE_SUITE']),'NAME_TYPE_SUITE'] = "Unaccompanied"

prev_appln.loc[pd.isnull(prev_appln['PRODUCT_COMBINATION']),'PRODUCT_COMBINATION'] = "Cash"

prev_appln.isnull().sum()

SK_ID_PREV 0

SK_ID_CURR 0

NAME_CONTRACT_TYPE 0

AMT_ANNUITY 0

AMT_APPLICATION 0

AMT_CREDIT 0

AMT_GOODS_PRICE 0

WEEKDAY_APPR_PROCESS_START 0

HOUR_APPR_PROCESS_START 0

FLAG_LAST_APPL_PER_CONTRACT 0

NFLAG_LAST_APPL_IN_DAY 0

NAME_CASH_LOAN_PURPOSE 0

NAME_CONTRACT_STATUS 0

DAYS_DECISION 0

NAME_PAYMENT_TYPE 0

CODE_REJECT_REASON 0

NAME_TYPE_SUITE 0

NAME_CLIENT_TYPE 0

NAME_GOODS_CATEGORY 0

NAME_PORTFOLIO 0

NAME_PRODUCT_TYPE 0

CHANNEL_TYPE 0

SELLERPLACE_AREA 0

NAME_SELLER_INDUSTRY 0

CNT_PAYMENT 0

NAME_YIELD_GROUP 0

PRODUCT_COMBINATION 0

DAYS_FIRST_DRAWING 0

DAYS_FIRST_DUE 0

DAYS_LAST_DUE_1ST_VERSION 0

DAYS_LAST_DUE 0

DAYS_TERMINATION 0

NFLAG_INSURED_ON_APPROVAL 0

dtype: int64

#Checking the no. of unique SK_ID_CURR values

count3 = prev_appln["SK_ID_CURR"].unique()

count3.shape

(338857,)

For each unique SK_ID_CURR there are duplicate rows that provide the data for multiple dates so we need to keep only that row that has the most recent information and drop the old information. In this dataset we will keep only those rows that have the max DAYS_DECISION which is Relative to current application when was the decision about previous application made(-1 means the freshest balance date)) and delete the other rows for each unique SK_ID_CURR.

prev_appln = prev_appln.groupby('SK_ID_CURR', group_keys=False).apply(lambda x: x.loc[x.DAYS_DECISION.idxmax()])

prev_appln.shape

(338857, 33)

prev_appln['index'] = prev_appln.index

prev_appln.index.name = None

prev_appln.drop(['SK_ID_PREV', 'index'], axis = 1, inplace = True)

prev_appln.head()

| SK_ID_CURR | NAME_CONTRACT_TYPE | AMT_ANNUITY | AMT_APPLICATION | AMT_CREDIT | AMT_GOODS_PRICE | WEEKDAY_APPR_PROCESS_START | HOUR_APPR_PROCESS_START | FLAG_LAST_APPL_PER_CONTRACT | NFLAG_LAST_APPL_IN_DAY | NAME_CASH_LOAN_PURPOSE | NAME_CONTRACT_STATUS | DAYS_DECISION | NAME_PAYMENT_TYPE | CODE_REJECT_REASON | NAME_TYPE_SUITE | NAME_CLIENT_TYPE | NAME_GOODS_CATEGORY | NAME_PORTFOLIO | NAME_PRODUCT_TYPE | CHANNEL_TYPE | SELLERPLACE_AREA | NAME_SELLER_INDUSTRY | CNT_PAYMENT | NAME_YIELD_GROUP | PRODUCT_COMBINATION | DAYS_FIRST_DRAWING | DAYS_FIRST_DUE | DAYS_LAST_DUE_1ST_VERSION | DAYS_LAST_DUE | DAYS_TERMINATION | NFLAG_INSURED_ON_APPROVAL | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 100001 | 100001 | Consumer loans | 3951.000000 | 24835.500000 | 23787.000000 | 24835.500000 | FRIDAY | 13 | Y | 1 | XAP | Approved | -1740 | Cash through the bank | XAP | Family | Refreshed | Mobile | POS | XNA | Country-wide | 23 | Connectivity | 8.000000 | high | POS mobile with interest | 365243.000000 | -1709.000000 | -1499.000000 | -1619.000000 | -1612.000000 | 0.000000 |

| 100002 | 100002 | Consumer loans | 9251.775000 | 179055.000000 | 179055.000000 | 179055.000000 | SATURDAY | 9 | Y | 1 | XAP | Approved | -606 | XNA | XAP | Unaccompanied | New | Vehicles | POS | XNA | Stone | 500 | Auto technology | 24.000000 | low_normal | POS other with interest | 365243.000000 | -565.000000 | 125.000000 | -25.000000 | -17.000000 | 0.000000 |

| 100003 | 100003 | Cash loans | 98356.995000 | 900000.000000 | 1035882.000000 | 900000.000000 | FRIDAY | 12 | Y | 1 | XNA | Approved | -746 | XNA | XAP | Unaccompanied | Repeater | XNA | Cash | x-sell | Credit and cash offices | -1 | XNA | 12.000000 | low_normal | Cash X-Sell: low | 365243.000000 | -716.000000 | -386.000000 | -536.000000 | -527.000000 | 1.000000 |

| 100004 | 100004 | Consumer loans | 5357.250000 | 24282.000000 | 20106.000000 | 24282.000000 | FRIDAY | 5 | Y | 1 | XAP | Approved | -815 | Cash through the bank | XAP | Unaccompanied | New | Mobile | POS | XNA | Regional / Local | 30 | Connectivity | 4.000000 | middle | POS mobile without interest | 365243.000000 | -784.000000 | -694.000000 | -724.000000 | -714.000000 | 0.000000 |

| 100005 | 100005 | Cash loans | 15955.120659 | 0.000000 | 0.000000 | 227847.279283 | FRIDAY | 10 | Y | 1 | XNA | Canceled | -315 | XNA | XAP | Unaccompanied | Repeater | XNA | XNA | XNA | Credit and cash offices | -1 | XNA | 16.054082 | XNA | Cash | 342209.855039 | 13826.269337 | 33767.774054 | 76582.403064 | 81992.343838 | 0.332570 |

The previous_application data set is now clean with no missing values and ready to be merged with the other datasets.

STEP 5: Loading and preparing Application_train dataset

#Loading the dataset

app_train = pd.read_csv('/content/grive/MyDrive/HomeLoanDefault/application_train.csv')

app_train.head().T

| 0 | 1 | 2 | 3 | 4 | |

|---|---|---|---|---|---|

| SK_ID_CURR | 100002 | 100003 | 100004 | 100006 | 100007 |

| TARGET | 1 | 0 | 0 | 0 | 0 |

| NAME_CONTRACT_TYPE | Cash loans | Cash loans | Revolving loans | Cash loans | Cash loans |

| CODE_GENDER | M | F | M | F | M |

| FLAG_OWN_CAR | N | N | Y | N | N |

| FLAG_OWN_REALTY | Y | N | Y | Y | Y |

| CNT_CHILDREN | 0 | 0 | 0 | 0 | 0 |

| AMT_INCOME_TOTAL | 202500.000000 | 270000.000000 | 67500.000000 | 135000.000000 | 121500.000000 |

| AMT_CREDIT | 406597.500000 | 1293502.500000 | 135000.000000 | 312682.500000 | 513000.000000 |

| AMT_ANNUITY | 24700.500000 | 35698.500000 | 6750.000000 | 29686.500000 | 21865.500000 |

| AMT_GOODS_PRICE | 351000.000000 | 1129500.000000 | 135000.000000 | 297000.000000 | 513000.000000 |

| NAME_TYPE_SUITE | Unaccompanied | Family | Unaccompanied | Unaccompanied | Unaccompanied |

| NAME_INCOME_TYPE | Working | State servant | Working | Working | Working |

| NAME_EDUCATION_TYPE | Secondary / secondary special | Higher education | Secondary / secondary special | Secondary / secondary special | Secondary / secondary special |

| NAME_FAMILY_STATUS | Single / not married | Married | Single / not married | Civil marriage | Single / not married |

| NAME_HOUSING_TYPE | House / apartment | House / apartment | House / apartment | House / apartment | House / apartment |

| REGION_POPULATION_RELATIVE | 0.018801 | 0.003541 | 0.010032 | 0.008019 | 0.028663 |

| DAYS_BIRTH | -9461 | -16765 | -19046 | -19005 | -19932 |

| DAYS_EMPLOYED | -637 | -1188 | -225 | -3039 | -3038 |

| DAYS_REGISTRATION | -3648.000000 | -1186.000000 | -4260.000000 | -9833.000000 | -4311.000000 |

| DAYS_ID_PUBLISH | -2120 | -291 | -2531 | -2437 | -3458 |

| OWN_CAR_AGE | NaN | NaN | 26.000000 | NaN | NaN |

| FLAG_MOBIL | 1 | 1 | 1 | 1 | 1 |

| FLAG_EMP_PHONE | 1 | 1 | 1 | 1 | 1 |

| FLAG_WORK_PHONE | 0 | 0 | 1 | 0 | 0 |

| FLAG_CONT_MOBILE | 1 | 1 | 1 | 1 | 1 |

| FLAG_PHONE | 1 | 1 | 1 | 0 | 0 |

| FLAG_EMAIL | 0 | 0 | 0 | 0 | 0 |

| OCCUPATION_TYPE | Laborers | Core staff | Laborers | Laborers | Core staff |

| CNT_FAM_MEMBERS | 1.000000 | 2.000000 | 1.000000 | 2.000000 | 1.000000 |

| REGION_RATING_CLIENT | 2 | 1 | 2 | 2 | 2 |

| REGION_RATING_CLIENT_W_CITY | 2 | 1 | 2 | 2 | 2 |

| WEEKDAY_APPR_PROCESS_START | WEDNESDAY | MONDAY | MONDAY | WEDNESDAY | THURSDAY |

| HOUR_APPR_PROCESS_START | 10 | 11 | 9 | 17 | 11 |

| REG_REGION_NOT_LIVE_REGION | 0 | 0 | 0 | 0 | 0 |

| REG_REGION_NOT_WORK_REGION | 0 | 0 | 0 | 0 | 0 |

| LIVE_REGION_NOT_WORK_REGION | 0 | 0 | 0 | 0 | 0 |

| REG_CITY_NOT_LIVE_CITY | 0 | 0 | 0 | 0 | 0 |

| REG_CITY_NOT_WORK_CITY | 0 | 0 | 0 | 0 | 1 |

| LIVE_CITY_NOT_WORK_CITY | 0 | 0 | 0 | 0 | 1 |

| ORGANIZATION_TYPE | Business Entity Type 3 | School | Government | Business Entity Type 3 | Religion |

| EXT_SOURCE_1 | 0.083037 | 0.311267 | NaN | NaN | NaN |

| EXT_SOURCE_2 | 0.262949 | 0.622246 | 0.555912 | 0.650442 | 0.322738 |

| EXT_SOURCE_3 | 0.139376 | NaN | 0.729567 | NaN | NaN |

| APARTMENTS_AVG | 0.024700 | 0.095900 | NaN | NaN | NaN |

| BASEMENTAREA_AVG | 0.036900 | 0.052900 | NaN | NaN | NaN |

| YEARS_BEGINEXPLUATATION_AVG | 0.972200 | 0.985100 | NaN | NaN | NaN |

| YEARS_BUILD_AVG | 0.619200 | 0.796000 | NaN | NaN | NaN |

| COMMONAREA_AVG | 0.014300 | 0.060500 | NaN | NaN | NaN |

| ELEVATORS_AVG | 0.000000 | 0.080000 | NaN | NaN | NaN |

| ENTRANCES_AVG | 0.069000 | 0.034500 | NaN | NaN | NaN |

| FLOORSMAX_AVG | 0.083300 | 0.291700 | NaN | NaN | NaN |

| FLOORSMIN_AVG | 0.125000 | 0.333300 | NaN | NaN | NaN |

| LANDAREA_AVG | 0.036900 | 0.013000 | NaN | NaN | NaN |

| LIVINGAPARTMENTS_AVG | 0.020200 | 0.077300 | NaN | NaN | NaN |

| LIVINGAREA_AVG | 0.019000 | 0.054900 | NaN | NaN | NaN |

| NONLIVINGAPARTMENTS_AVG | 0.000000 | 0.003900 | NaN | NaN | NaN |

| NONLIVINGAREA_AVG | 0.000000 | 0.009800 | NaN | NaN | NaN |

| APARTMENTS_MODE | 0.025200 | 0.092400 | NaN | NaN | NaN |

| BASEMENTAREA_MODE | 0.038300 | 0.053800 | NaN | NaN | NaN |

| YEARS_BEGINEXPLUATATION_MODE | 0.972200 | 0.985100 | NaN | NaN | NaN |

| YEARS_BUILD_MODE | 0.634100 | 0.804000 | NaN | NaN | NaN |

| COMMONAREA_MODE | 0.014400 | 0.049700 | NaN | NaN | NaN |

| ELEVATORS_MODE | 0.000000 | 0.080600 | NaN | NaN | NaN |

| ENTRANCES_MODE | 0.069000 | 0.034500 | NaN | NaN | NaN |

| FLOORSMAX_MODE | 0.083300 | 0.291700 | NaN | NaN | NaN |

| FLOORSMIN_MODE | 0.125000 | 0.333300 | NaN | NaN | NaN |

| LANDAREA_MODE | 0.037700 | 0.012800 | NaN | NaN | NaN |

| LIVINGAPARTMENTS_MODE | 0.022000 | 0.079000 | NaN | NaN | NaN |

| LIVINGAREA_MODE | 0.019800 | 0.055400 | NaN | NaN | NaN |

| NONLIVINGAPARTMENTS_MODE | 0.000000 | 0.000000 | NaN | NaN | NaN |

| NONLIVINGAREA_MODE | 0.000000 | 0.000000 | NaN | NaN | NaN |

| APARTMENTS_MEDI | 0.025000 | 0.096800 | NaN | NaN | NaN |

| BASEMENTAREA_MEDI | 0.036900 | 0.052900 | NaN | NaN | NaN |

| YEARS_BEGINEXPLUATATION_MEDI | 0.972200 | 0.985100 | NaN | NaN | NaN |

| YEARS_BUILD_MEDI | 0.624300 | 0.798700 | NaN | NaN | NaN |

| COMMONAREA_MEDI | 0.014400 | 0.060800 | NaN | NaN | NaN |

| ELEVATORS_MEDI | 0.000000 | 0.080000 | NaN | NaN | NaN |

| ENTRANCES_MEDI | 0.069000 | 0.034500 | NaN | NaN | NaN |

| FLOORSMAX_MEDI | 0.083300 | 0.291700 | NaN | NaN | NaN |

| FLOORSMIN_MEDI | 0.125000 | 0.333300 | NaN | NaN | NaN |

| LANDAREA_MEDI | 0.037500 | 0.013200 | NaN | NaN | NaN |

| LIVINGAPARTMENTS_MEDI | 0.020500 | 0.078700 | NaN | NaN | NaN |

| LIVINGAREA_MEDI | 0.019300 | 0.055800 | NaN | NaN | NaN |

| NONLIVINGAPARTMENTS_MEDI | 0.000000 | 0.003900 | NaN | NaN | NaN |

| NONLIVINGAREA_MEDI | 0.000000 | 0.010000 | NaN | NaN | NaN |

| FONDKAPREMONT_MODE | reg oper account | reg oper account | NaN | NaN | NaN |

| HOUSETYPE_MODE | block of flats | block of flats | NaN | NaN | NaN |

| TOTALAREA_MODE | 0.014900 | 0.071400 | NaN | NaN | NaN |

| WALLSMATERIAL_MODE | Stone, brick | Block | NaN | NaN | NaN |

| EMERGENCYSTATE_MODE | No | No | NaN | NaN | NaN |